Last Updated On : 11-Feb-2026

Salesforce Certified MuleSoft Platform Architect - Mule-Arch-201 Practice Test

Prepare with our free Salesforce Certified MuleSoft Platform Architect - Mule-Arch-201 sample questions and pass with confidence. Our Salesforce-MuleSoft-Platform-Architect practice test is designed to help you succeed on exam day.

Universal Containers sells a total of 100 Products. There are 80 Products that are generally available for selection by all users (General Access). The remaining 20 Products should only be available to a certain group of users (Special Access).

Which Product Selection and Price Book strategy should the admin utilize to meet the requirements?

A. Create one Price Book that contains all 100 Products. Create a Validation Rule on the Quote object to prevent selection of a Special Access Product based on the level of User access.

B. Create one Price Book that contains all 100 Products. Create a custom Product field to designate General Access and/or Special Access. Utilize Hidden Search Filters to support dynamic Product visibility based on the level of User access.

C. Create two Price Books: one General Access Price Book with the 80 generally available Products, and one Special Access Price Book with the 20 Special Access Products. Create automation to populate the appropriate Price Book ID into the SBQQ__QuotePricebookId__c field on the Opportunity.

D. Create two Price Books: one General Access Price Book with the 80 generally available Products, and one Special Access Price Book with all 100 Products. Use Guided Selling to assign the appropriate Price Book based on the level of User access.

Explanation:

Why B is the best strategy

You need:

- 80 products visible to everyone

- 20 products visible only to a specific user group

- A solution that controls product selection visibility, not just validation after the fact.

In Salesforce CPQ, the right approach is to keep one Price Book (so all users can quote from the same catalog) and control which products appear in the Quote Line Editor using Search Filters / Hidden Search Filters.

With B, you:

- Create a custom field on Product (or Product Option) such as:

Access_Level__c = General or Special

- Create a Hidden Search Filter that dynamically filters products based on user context (for example, user profile, permission set, role, or a “Special Access” flag on the User record).

Hidden Search Filters are designed specifically to:

- Filter product results automatically

- Enforce visibility rules without users needing to choose a filter

- Prevent unauthorized users from even seeing restricted products (best UX and compliance)
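
The effect of such a filter can be pictured as a simple predicate over the catalog. The sketch below is plain Python, not CPQ configuration: `Product`, `access_level`, and `has_special_access` are hypothetical names standing in for the custom Product field and the user-context flag described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    access_level: str  # "General" or "Special"; hypothetical stand-in for Access_Level__c


def visible_products(catalog, has_special_access):
    """Return the products a user would see during product selection."""
    if has_special_access:
        return list(catalog)
    # Users without special access never see the restricted products at all.
    return [p for p in catalog if p.access_level == "General"]


# One catalog (one Price Book) holding all 100 products from the scenario.
catalog = [Product(f"General-{i}", "General") for i in range(80)]
catalog += [Product(f"Special-{i}", "Special") for i in range(20)]

print(len(visible_products(catalog, has_special_access=False)))
print(len(visible_products(catalog, has_special_access=True)))
```

The point of the sketch is that filtering happens before selection, which is what distinguishes this approach from a validation rule that rejects a choice afterwards.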

Why the other options are weaker

A (Validation Rule) ❌

This doesn’t prevent users from seeing or even selecting the product initially; it only blocks later with an error. Bad UX and not true “visibility restriction.”

C (two price books: 80 and 20) ❌

A quote generally uses one price book. If the “Special” price book contains only the 20 products, special users would lose access to the general 80 (unless you duplicate products across price books, which this option doesn’t do).

D (two price books + guided selling) ❌

Guided Selling helps recommend products, but it’s not the primary mechanism for hard visibility control. Also, maintaining separate price books adds administrative overhead and risk of inconsistent pricing/catalog governance.

A large lending company has developed an API to unlock data from a database server and web server. The API has been deployed to Anypoint Virtual Private Cloud (VPC) on CloudHub 1.0.

The database server and web server are in the customer's secure network and are not accessible through the public internet. The database server is in the customer's AWS VPC, whereas the web server is in the customer's on-premises corporate data center.

How can access be enabled for the API to connect with the database server and the web server?

A. Set up VPC peering with AWS VPC and a VPN tunnel to the customer's on-premises corporate data center

B. Set up VPC peering with AWS VPC and the customer's on-premises corporate data center

C. Set up a transit gateway to the customer's on-premises corporate data center through AWS VPC

D. Set up VPC peering with the customer's on-premises corporate data center and a VPN tunnel to AWS VPC

Explanation:

This is a hybrid connectivity scenario where the CloudHub API needs to reach resources in two different private networks:

Database Server in AWS VPC: The cleanest, native AWS method is VPC Peering between the Anypoint VPC (which runs in AWS) and the customer's AWS VPC. This establishes direct, private network connectivity within AWS.

Web Server in On-Premises Data Center: For resources outside AWS, you need a VPN tunnel (or AWS Direct Connect) between the Anypoint VPC and the customer's corporate network. AWS provides VPN Gateway services for this purpose.

These two methods are complementary and necessary to reach both environments from the CloudHub VPC.
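
The reasoning above amounts to a lookup from where a target system lives to the connectivity method that suits it. This is a minimal sketch in Python; the location labels `aws_vpc` and `on_premises` are hypothetical names chosen for illustration, not Anypoint or AWS identifiers.

```python
# Hypothetical location labels mapped to the method the explanation recommends.
CONNECTIVITY_BY_LOCATION = {
    "aws_vpc": "VPC peering",     # AWS-to-AWS private connectivity
    "on_premises": "VPN tunnel",  # reaches networks outside AWS
}


def connectivity_for(location: str) -> str:
    """Return the connectivity method for a target network location."""
    try:
        return CONNECTIVITY_BY_LOCATION[location]
    except KeyError:
        raise ValueError(f"unknown location: {location!r}") from None


# The two backend systems from the scenario.
targets = {"database server": "aws_vpc", "web server": "on_premises"}
plan = {name: connectivity_for(loc) for name, loc in targets.items()}
print(plan)
```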

Why the Other Options Are Incorrect:

B. VPC peering with AWS VPC and the customer's on-premises data center: VPC peering only works between two AWS VPCs. You cannot peer with an on-premises network. This option incorrectly suggests VPC peering can solve both connections.

C. Set up a transit gateway to the customer's on-premises data center through AWS VPC: A transit gateway could be part of a larger network architecture but is overkill and not the standard, direct answer. It also doesn't address the AWS VPC connection explicitly; it assumes the transit gateway connects everything. The simpler, standard answer is separate peering and VPN.

D. VPC peering with on-premises and VPN to AWS VPC: This reverses the correct methods. You cannot do VPC peering with on-premises. You can do VPN to AWS VPC, but VPC peering is the preferred, more performant solution for AWS-to-AWS connectivity.

Reference:

CloudHub VPC Networking Documentation: Describes using VPC peering to connect to resources in a customer's AWS VPC, and a Site-to-Site VPN from the CloudHub VPC to the customer's network to connect to on-premises resources.

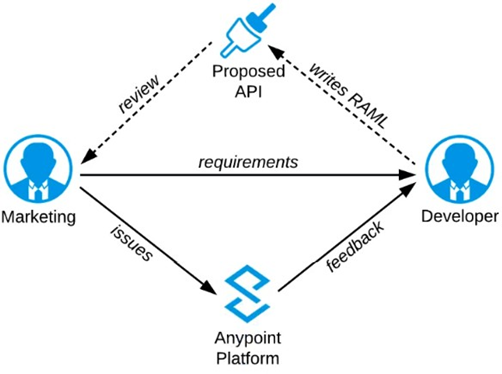

Refer to the exhibit.

A RAML definition has been proposed for a new Promotions Process API, and has been published to Anypoint Exchange.

The Marketing Department, who will be an important consumer of the Promotions API, has important requirements and expectations that must be met.

What is the most effective way to use Anypoint Platform features to involve the Marketing Department in this early API design phase?

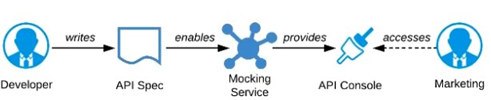

A) Ask the Marketing Department to interact with a mocking implementation of the API using the automatically generated API Console

B) Organize a design workshop with the DBAs of the Marketing Department in which the database schema of the Marketing IT systems is translated into RAML

C) Use Anypoint Studio to implement the API as a Mule application, then deploy that API implementation to CloudHub and ask the Marketing Department to interact with it

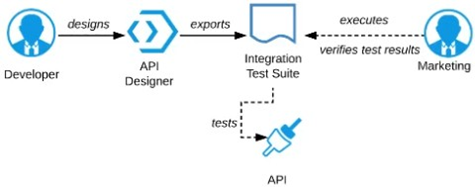

D) Export an integration test suite from API designer and have the Marketing Department execute the tests in that suite to ensure they pass

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

The Anypoint Platform emphasizes an "API-first" and "design-first" approach to development. This philosophy is specifically designed to involve stakeholders like the Marketing Department (who are typically non-technical API consumers) early in the lifecycle, before any backend code is written.

Mocking Service: When a developer publishes a RAML or OAS definition to Anypoint Exchange, the platform automatically generates documentation (API Console) and a fully functional mocking service.

Early Feedback: The Marketing Department can use this mock service via the API Console interface to simulate real API interactions, test the data models (e.g., "What does a promotion response look like?"), and provide immediate feedback on the design. This rapid feedback loop allows architects and developers to incorporate changes quickly, reducing rework later in the development process.

Analysis of Other Options

B (Design workshop with DBAs): This approach focuses too heavily on the technical, internal database schema rather than the consumer's needs or the public-facing API contract. The Marketing Department managers/users are unlikely to be DBAs, and this method does not use the platform's collaboration features effectively.

C (Implement and deploy to CloudHub): This is expensive and time-consuming. The core benefit of mocking is getting feedback before implementation and deployment.

D (Export integration test suite): The Marketing Department members are business users, not technical testers. Asking them to execute technical test suites is inappropriate and inefficient.

Key References

Anypoint Exchange: Serves as the primary mechanism for sharing API specifications and enabling early collaboration using built-in documentation and mocking capabilities.

API-first design: The methodology promotes designing the API contract first and gathering feedback via mocks to ensure the API meets consumer needs efficiently.

What Mule application can have API policies applied by Anypoint Platform to the endpoint exposed by that Mule application?

A) A Mule application that accepts requests over HTTP/1.x

B) A Mule application that accepts JSON requests over TCP but is NOT required to provide a response

C) A Mule application that accepts JSON requests over WebSocket

D) A Mule application that accepts gRPC requests over HTTP/2

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

Anypoint Platform policies (such as Rate Limiting, Client ID Enforcement, OAuth, Spike Control, etc.) are applied through API Manager.

These policies are enforced at the API Gateway layer, which only works with Mule applications that expose endpoints over HTTP/1.x (via the HTTP Listener).

Policies cannot be applied to applications that use TCP, WebSocket, or gRPC over HTTP/2, because those protocols bypass the API Gateway’s policy enforcement mechanism.
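
The rule stated above can be checked mechanically against the four answer options. This is a minimal sketch in Python that encodes only what the explanation says; the names `OPTIONS` and `POLICY_CAPABLE` are hypothetical and do not correspond to any Anypoint Platform API.

```python
# Protocol accepted by the Mule application in each answer option.
OPTIONS = {
    "A": "HTTP/1.x",
    "B": "TCP",
    "C": "WebSocket",
    "D": "gRPC over HTTP/2",
}

# Per the explanation above, only HTTP/1.x endpoints can have policies applied.
POLICY_CAPABLE = {"HTTP/1.x"}

eligible = [option for option, protocol in OPTIONS.items() if protocol in POLICY_CAPABLE]
print(eligible)
```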

❌ Why not the other options?

B. JSON over TCP → Not supported by API Manager policies. TCP endpoints don’t integrate with the API Gateway.

C. JSON over WebSocket → WebSocket connections are not supported for policy enforcement.

D. gRPC over HTTP/2 → MuleSoft does not support applying API Manager policies to gRPC endpoints.

👉 In summary:

Only Mule applications that expose HTTP/1.x endpoints can have API policies applied by Anypoint Platform.

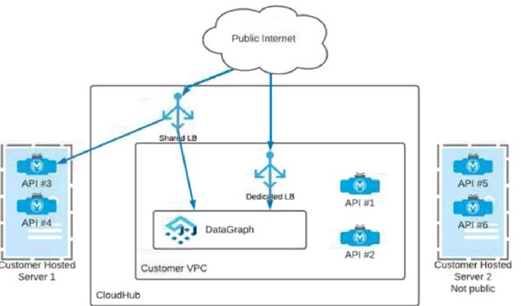

Which APIs can be used with DataGraph to create a unified schema?

A. APIs 1, 3, 5

B. APIs 2, 4, 6

C. APIs 1, 2, 5, 6

D. APIs 1, 2, 3, 4

Explanation:

Why D is correct

To be used in Anypoint DataGraph for building a unified schema, an API must be reachable from the DataGraph runtime. In the exhibit:

API #1 and API #2 are deployed in CloudHub inside the Customer VPC. They can be reached through the Dedicated Load Balancer (DLB) that fronts the VPC-hosted CloudHub apps. That means DataGraph can invoke them securely through the VPC/DLB path.

API #3 and API #4 are on Customer Hosted Server 1, which is shown as publicly reachable (traffic can come from the public internet, and the diagram shows connectivity into the CloudHub side via the shared LB path). Because they are reachable over the network from DataGraph, they can be included in the unified schema.

API #5 and API #6 are on Customer Hosted Server 2 (Not public). Since they are explicitly marked not public and the diagram shows no private connectivity path (no VPN/Direct Connect/VPC peering/Anypoint networking link) from CloudHub/DataGraph to that server, DataGraph would not be able to reach those APIs. If an API can’t be reached, it can’t be used as a DataGraph source.
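
The reachability argument can be summarised as a filter over the six APIs. The sketch below is illustrative Python only: the `Api` class and its `reachable` flag are hypothetical, and the flag values simply restate the reading of the exhibit given above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Api:
    number: int
    host: str
    reachable: bool  # True if a network path from DataGraph is described above


apis = [
    Api(1, "CloudHub in Customer VPC (via DLB)", True),
    Api(2, "CloudHub in Customer VPC (via DLB)", True),
    Api(3, "Customer Hosted Server 1 (public)", True),
    Api(4, "Customer Hosted Server 1 (public)", True),
    Api(5, "Customer Hosted Server 2 (not public)", False),
    Api(6, "Customer Hosted Server 2 (not public)", False),
]

# Only reachable APIs can serve as sources for the unified schema.
usable = sorted(api.number for api in apis if api.reachable)
print(usable)
```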

Why the other options are wrong

A (1,3,5) includes API #5, which is not publicly reachable and has no private route shown → not usable.

B (2,4,6) includes API #6, also not reachable → not usable.

C (1,2,5,6) includes API #5 and #6, neither reachable → not usable.

✅ Therefore, the APIs that can be used with DataGraph in this architecture are APIs 1, 2, 3, and 4 → Option D.
