Last Updated On: 11-Feb-2026

Salesforce Certified MuleSoft Platform Architect - Mule-Arch-201 Practice Test

Prepare with our free Salesforce Certified MuleSoft Platform Architect - Mule-Arch-201 sample questions and pass with confidence. Our Salesforce-MuleSoft-Platform-Architect practice test is designed to help you succeed on exam day.

Salesforce 2026

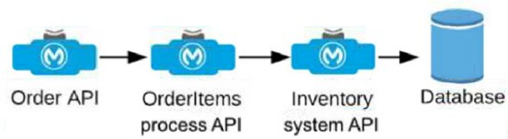

An Order API triggers a sequence of other API calls to look up details of an order's items in a back-end inventory database. The Order API calls the OrderItems process API, which calls the Inventory system API. The Inventory system API performs database operations in the back-end inventory database.

The network connection between the Inventory system API and the database is known to be unreliable and to hang at unpredictable times.

Where should a two-second timeout be configured in the API processing sequence so that the Order API never waits more than two seconds for a response from the OrderItems process API?

A. In the OrderItems process API implementation

B. In the Order API implementation

C. In the Inventory system API implementation

D. In the inventory database

Explanation:

The SLA Requirement: The requirement is that the Order API never waits more than two seconds for a response from the OrderItems Process API.

Controlling the Wait Time: To guarantee this, the Order API must set a response timeout on its outbound HTTP request to the OrderItems Process API.

Point of Enforcement: In MuleSoft, the HTTP Request connector is where you specify how long the current flow should block while waiting for a response. Setting this to 2,000 ms (2 seconds) in the Order API ensures that if the downstream chain (Process API → System API → Database) hangs, the Order API will stop waiting and can take corrective action (like returning an error or a fallback response).
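The fail-fast idea can be illustrated outside Mule. The Python sketch below is only an analogy, not MuleSoft code: the caller submits the downstream call to a thread pool and stops waiting after a fixed timeout, returning a fallback instead. The names `call_with_timeout` and `slow_orderitems_call` are hypothetical. In a Mule 4 application the corresponding setting is the HTTP Request operation's response timeout (`responseTimeout`, in milliseconds).

```python
import concurrent.futures as cf
import time
from typing import Any, Callable

# Shared pool for outbound calls. A timed-out call keeps running in its
# worker thread; only the caller stops waiting for it.
_POOL = cf.ThreadPoolExecutor(max_workers=4)


def call_with_timeout(call: Callable[[], Any], timeout_s: float, fallback: Any) -> Any:
    """Wait at most timeout_s seconds for call(); return fallback on timeout."""
    future = _POOL.submit(call)
    try:
        return future.result(timeout=timeout_s)
    except cf.TimeoutError:
        # Fail fast: the caller decides what to return instead of hanging.
        return fallback


def slow_orderitems_call(delay_s: float = 5.0) -> dict:
    """Hypothetical stand-in for a downstream chain that hangs for delay_s seconds."""
    time.sleep(delay_s)
    return {"items": ["widget"]}
```

For example, `call_with_timeout(slow_orderitems_call, 2.0, {"error": "OrderItems timed out"})` returns the fallback after about two seconds even though the simulated downstream call takes five. Note that the timeout bounds only the caller's wait; the downstream work is not cancelled, which is also true of a client-side HTTP timeout.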

Downstream Issues: Because the connection between the System API and the database is known to be unreliable and to hang, any timeout set further down the chain (e.g., in the System API) protects only that layer, not the Order API. If the System API's timeout is longer than 2 seconds, or if the hang occurs in a layer above it, the Order API would still be left waiting beyond its limit.

🔴 Incorrect Answers

A. In the OrderItems Process API: Configuring a timeout here would control how long the Process API waits for the System API. It does not prevent the Order API from waiting indefinitely if the Process API itself hangs or has a longer timeout.

C. In the Inventory System API: This would protect the System API from a hanging database, but it does not account for potential latency or hangs within the OrderItems Process API layer.

D. In the inventory database: Database timeouts are a last resort. While good for resource management on the DB server, they are often set to much longer durations (e.g., 30–60 seconds) and cannot guarantee the specific 2-second SLA required by the top-level Order API.
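The option analysis above can be checked with a small model. This Python sketch is a simplification under stated assumptions: it ignores processing and network overhead, treats each configured timeout as an upper bound on how long that caller waits, and asks how long the Order API can wait depending on where the hang occurs. The function names are hypothetical, and the database-side timeout of option D is not modeled because it sits outside the callers' control.

```python
import math
from typing import Optional, Sequence

# Index i is the outbound call made by hop i:
#   0 = Order API -> OrderItems process API
#   1 = OrderItems process API -> Inventory system API
#   2 = Inventory system API -> inventory database


def order_api_wait(timeouts: Sequence[Optional[float]], hang_at: int) -> float:
    """Worst-case seconds the Order API waits if outbound call `hang_at` hangs.

    Only callers at or above the hang can cut the wait short; a timeout of
    None means that hop waits indefinitely. Overhead is ignored.
    """
    bounds = [t for t in timeouts[: hang_at + 1] if t is not None]
    return min(bounds) if bounds else math.inf


def worst_case(timeouts: Sequence[Optional[float]]) -> float:
    """Worst-case Order API wait over every possible hang location."""
    return max(order_api_wait(timeouts, k) for k in range(len(timeouts)))


print(worst_case([2.0, None, None]))  # 2.0  (option B: timeout in the Order API)
print(worst_case([None, 2.0, None]))  # inf  (option A: OrderItems process API only)
print(worst_case([None, None, 2.0]))  # inf  (option C: Inventory system API only)
```

Only a timeout in the Order API bounds the wait for every hang location, which is the point of answer B.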

📚 Reference

MuleSoft Documentation: HTTP Request Connector Response Timeout

Key Concept:

Fail Fast. In a distributed architecture, each consumer is responsible for its own uptime and performance. By setting a timeout at the "top" of the chain (Order API), you ensure that the end-user experience is protected from downstream unreliability.

An API implementation is deployed on a single worker on CloudHub and invoked by external API clients (outside of CloudHub). How can an alert be set up that is guaranteed to trigger AS SOON AS that API implementation stops responding to API invocations?

A. Implement a heartbeat/health check within the API and invoke it from outside the Anypoint Platform and alert when the heartbeat does not respond

B. Configure a "worker not responding" alert in Anypoint Runtime Manager

C. Handle API invocation exceptions within the calling API client and raise an alert from that API client when the API is unavailable

D. Create an alert for when the API receives no requests within a specified time period

Explanation:

To guarantee that an alert triggers as soon as the API stops responding to external clients, an end-to-end monitoring approach is required.

End-to-End Monitoring: External clients reach the API through several layers, such as the CloudHub Shared Load Balancer (SLB) or a Dedicated Load Balancer (DLB) and any customer networking configuration, before the request reaches the worker. A "heartbeat" or health-check endpoint invoked from outside the platform exercises this entire path. If the network, the load balancer, or the application itself fails, the external monitor detects the failure on its next probe.
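A minimal sketch of such an external heartbeat monitor, assuming the API exposes a health endpoint over HTTP (the URL, the `/health` path, and the alert hook are hypothetical; in practice a synthetic-monitoring service would usually run the checks). It uses only Python's standard library: a probe succeeds only if the endpoint returns a 2xx status within a short timeout, and the monitor alerts on the first failed probe after a healthy one.

```python
import http.client
import time
import urllib.request
from typing import Callable


def probe(url: str, timeout_s: float = 2.0) -> bool:
    """Return True if url answers with a 2xx status within timeout_s seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            return 200 <= resp.status < 300
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, HTTPError (4xx/5xx), refused connections and timeouts are
        # OSError subclasses; ValueError covers a malformed URL; HTTPException
        # covers protocol-level errors such as a bad status line.
        return False


def run_monitor(check: Callable[[], bool], alert: Callable[[str], None],
                interval_s: float, max_checks: int) -> int:
    """Run check() every interval_s seconds; alert on each healthy-to-failed transition.

    Returns the number of alerts raised.
    """
    alerts = 0
    was_healthy = True
    for _ in range(max_checks):
        healthy = check()
        if was_healthy and not healthy:
            alert("API implementation stopped responding")
            alerts += 1
        was_healthy = healthy
        time.sleep(interval_s)
    return alerts
```

A usage example with a hypothetical URL: `run_monitor(lambda: probe("https://my-api.example.com/health"), print, interval_s=10, max_checks=360)` checks every ten seconds for about an hour. Alerting on the first failure trades some risk of false alarms for the fastest detection.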

Guaranteed Responsiveness: Standard internal platform alerts, like "Worker not responding," rely on an internal "ping" mechanism. CloudHub pings the worker every 30 seconds, and it takes three consecutive failed pings (90 seconds) before an alert is even triggered. Because of this delay, the alert does not fire "as soon as" the failure occurs, whereas an external probe can run more frequently and alert on the first failed check.
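The latency argument is simple arithmetic. If a check runs every `interval` seconds and an alert needs `n` consecutive failures, the worst case is a failure just after a successful check, so detection takes about n × interval, plus the probe timeout. The sketch below uses the 30-second, three-ping figures stated above; the 10-second interval and 2-second timeout for the external probe are illustrative assumptions.

```python
def worst_case_detection_s(interval_s: float, failures_needed: int,
                           probe_timeout_s: float = 0.0) -> float:
    """Worst-case seconds from failure to alert for a periodic health check.

    Assumes the failure happens right after a successful check, so the
    first failing check runs one full interval later.
    """
    return interval_s * failures_needed + probe_timeout_s


print(worst_case_detection_s(30, 3))       # 90.0  (worker-not-responding figures)
print(worst_case_detection_s(10, 1, 2.0))  # 12.0  (hypothetical external probe)
```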

Analysis of Other Options

B. "Worker not responding" alert: While this is a standard out-of-the-box feature, it only monitors the worker's health, not the end-to-end connectivity. Furthermore, it has a built-in latency of at least 90 seconds due to the triple-ping requirement.

C. Exception handling in the client: This is technically feasible but considered poor architectural practice. It places the burden of monitoring on the consumer and is difficult to maintain if there are dozens or hundreds of different API clients.

D. Alert for no requests: This is unreliable because a lack of requests does not necessarily mean the API is down; it could simply mean there is no traffic from the clients at that time.

Key Exam Takeaway:

For the Platform Architect exam, when a question asks for a "guaranteed" way to monitor availability from the perspective of an external client, the recommended solution is often an external health check (synthetic monitoring) to cover the entire request-response path.

A manufacturing company has deployed an API implementation to CloudHub and has not configured it to be automatically restarted by CloudHub when the worker is not responding.

Which statement is true when no API Client invokes that API implementation?

A. No alerts on the API invocations and API implementation can be raised

B. Alerts on the API invocations and API implementation can be raised

C. No alert on the API invocations is raised but alerts on the API implementation can be raised

D. Alerts on the API invocations are raised but no alerts on the API implementation can be raised

Explanation:

This question tests understanding of the different types of alerts in Anypoint Platform (API alerts configured in API Manager versus runtime alerts in Runtime Manager) and their triggering mechanisms.

Key Points:

Two Alert Categories:

- API Invocation Alerts: These are based on traffic/analytics metrics like high latency, error rate, or low traffic. They are triggered by data from actual API requests. If no client calls the API, there are no metrics to analyze, so these alerts cannot fire.

- API Implementation (Worker) Alerts: These are infrastructure/health checks, like the "Worker Not Responding" alert. Runtime Manager actively probes the Mule runtime process (via a health endpoint) at regular intervals, regardless of whether clients are sending traffic. If the worker process is dead or unresponsive, this alert will fire.

Scenario Context: The API is not configured for auto-restart, but that doesn't affect alerting. The key condition is "no API Client invokes that API." This means:

- No traffic → No API invocation metrics → No API Invocation alerts.

- Runtime Manager still performs health checks → Worker Not Responding alert can still be raised if the process fails.

Therefore, infrastructure alerts are possible, but traffic-based alerts are not.
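The distinction can be modeled in a few lines. This Python sketch is a conceptual model, not Anypoint's alert engine: a traffic-based rule (here a hypothetical average-latency threshold) is evaluated over the requests received in a window, so with zero requests there is nothing to evaluate, while a health-check rule is evaluated over the platform's own probe results, which exist whether or not clients send traffic. All names and thresholds are assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Request:
    latency_ms: float
    status: int


def invocation_alert(window: Sequence[Request], max_avg_latency_ms: float) -> bool:
    """Traffic-based rule: fires only if observed requests breach the threshold."""
    if not window:
        return False  # no invocations -> no data points -> nothing can fire
    avg = sum(r.latency_ms for r in window) / len(window)
    return avg > max_avg_latency_ms


def worker_alert(probe_results: Sequence[bool], failures_needed: int = 3) -> bool:
    """Health-check rule: fires after `failures_needed` consecutive failed probes."""
    streak = 0
    for ok in probe_results:
        streak = 0 if ok else streak + 1
        if streak >= failures_needed:
            return True
    return False


# No client traffic, and the worker has stopped answering the platform's probes.
print(invocation_alert([], max_avg_latency_ms=500))  # False
print(worker_alert([True, False, False, False]))     # True
```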

Why the Other Options Are Incorrect:

A. No alert... can be raised: Incorrect. The Worker Not Responding alert is independent of client traffic and can still be raised.

B. Alerts... can be raised: This is partially true but overly broad. It incorrectly suggests that API invocation alerts can be raised without any traffic, which is false.

D. Alerts on API invocations are raised...: This is false. API invocation alerts require actual request data to analyze. No traffic means no data, so no alerts.

Reference:

Anypoint Monitoring & Alerting Documentation: Distinguishes between:

- Application/API Alerts: Based on metrics like "Request Count," "Error Rate," "Latency." These require traffic.

- Worker/Server Alerts: Based on infrastructure health checks like "Worker not responding." These are proactive pings from the platform.

An organization wants to create a Center for Enablement (C4E). The IT director schedules a series of meetings with IT senior managers.

What should be on the agenda of the first meeting?

A. Define C4E objectives, mission statement, guiding principles, a

B. Explore API monetization options based on identified use cases through MuleSoft

C. A walkthrough of common-services best practices for logging, auditing, exception handling, caching, security via policy, and rate limiting/throttling via policy

D. Specify operating model for the MuleSoft Integrations division

Explanation:

✅ Why this is the correct answer

When establishing a Center for Enablement (C4E), the very first step is to align leadership around purpose, vision, and guiding principles. Before discussing tooling, operating models, or technical practices, the organization must first agree on:

- Why the C4E exists

- What business outcomes it supports

- How it will enable teams (rather than control or block them)

- What success looks like

This foundational alignment ensures that all future decisions—technical, organizational, and operational—are made consistently and strategically.

A C4E is not just a technical team; it is a governance and enablement model that influences culture, delivery velocity, and reuse across the organization.

❌ Why the other options are not first-step activities

B. Explore API monetization options

Monetization is an advanced maturity topic. Before monetizing APIs, the organization must first:

- Build reusable assets

- Establish governance

- Enable adoption across teams

It’s not a starting point.

C. Walkthrough of technical best practices

While important, this is too tactical for the first meeting. Best practices (logging, security, throttling, etc.) should come after alignment on vision and goals.

D. Specify the operating model for MuleSoft integrations

Defining an operating model is important, but it should come after agreement on goals, scope, and principles. Otherwise, the operating model risks being misaligned with business objectives.

🧠 Key takeaway

The first responsibility of a C4E is to align leadership on why the organization is adopting API-led connectivity and what success looks like — not to dive into tools or technical execution.

✅ Final answer: A

A large organization with an experienced central IT department is getting started using MuleSoft. There is a project to connect a siloed back-end system to a new Customer Relationship Management (CRM) system. The Center for Enablement is coaching them to use API-led connectivity.

What action would support the creation of an application network using API-led connectivity?

A. Invite the business analyst to create a business process model to specify the canonical data model between the two systems

B. Determine if the new CRM system supports the creation of custom REST APIs, establishes a private network with CloudHub, and supports OAuth 2.0 authentication

C. To expedite this project, central IT should extend the CRM system and back-end systems to connect to one another using built in integration interfaces

D. Create a System API to unlock the data on the back-end system using a REST API

Explanation:

API-led connectivity is MuleSoft's recommended approach to building an application network, which promotes reusability, scalability, and decoupling of systems by organizing integrations into three layers:

- System APIs: Unlock and expose data from underlying (often siloed) core systems in a secure, standardized way (typically as RESTful services). These APIs abstract the complexities of backend systems and provide a clean interface for data access.

- Process APIs: Orchestrate and transform data from multiple System APIs to support business processes.

- Experience APIs: Tailor data for specific consumer channels (e.g., mobile, web).

In this scenario, the project involves connecting a siloed back-end system to a new CRM. The first step in API-led connectivity is to create a System API that exposes the back-end system's data via REST. This unlocks the siloed data for reuse, allowing future integrations (including the CRM) to consume it without point-to-point connections, thereby starting to build a reusable application network.
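A minimal sketch of that first step, written outside Mule purely to illustrate the layer's responsibility. It uses Python's standard library and assumes a hypothetical back-end record store (`LEGACY_CUSTOMERS`) and resource path (`/customers/{id}`): the System API hides the back end's field names behind a small REST resource returning JSON, so the CRM integration and any later consumer call the same interface instead of connecting to the back end directly.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical siloed back end with cryptic legacy field names that
# consumers should never have to know about.
LEGACY_CUSTOMERS = {"42": {"CUST_NM": "Acme Corp", "CUST_STAT_CD": "A"}}


def get_customer(customer_id: str):
    """System API logic: map the back-end record to a clean representation."""
    rec = LEGACY_CUSTOMERS.get(customer_id)
    if rec is None:
        return None
    return {"id": customer_id, "name": rec["CUST_NM"],
            "active": rec["CUST_STAT_CD"] == "A"}


class SystemApiHandler(BaseHTTPRequestHandler):
    """Exposes GET /customers/{id} as JSON; every other path returns 404."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        body = None
        if len(parts) == 2 and parts[0] == "customers":
            body = get_customer(parts[1])
        status = 200 if body is not None else 404
        payload = json.dumps(body if body is not None else {"error": "not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)
```

To try it, run `HTTPServer(("localhost", 8081), SystemApiHandler).serve_forever()` and request `http://localhost:8081/customers/42`. A Process or Experience API would then consume this resource rather than the back end itself.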

Why not the other options?

A: Involving a business analyst in modeling is useful for defining requirements or canonical models later (often at the Process/Experience layer), but it is not the primary action to unlock siloed data and begin the application network.

B: Evaluating the CRM's capabilities (custom REST APIs, private networking with CloudHub, OAuth 2.0) is a technical consideration for security/deployment, but it does not directly support creating the application network via API-led principles.

C: Directly extending/connecting the systems using built-in interfaces is a traditional point-to-point integration, which API-led connectivity explicitly avoids as it creates tight coupling, reduces reusability, and hinders building a scalable application network.

References:

This aligns with MuleSoft's official guidance on API-led connectivity, where System APIs are the foundation for unlocking core systems (see MuleSoft documentation on API layers and application networks). The Center for Enablement (C4E) coaches organizations to adopt this layered, reusable approach rather than expedited point-to-point solutions.
