Salesforce-Platform-Developer-II Practice Test

Updated On 1-Jan-2026

202 Questions

Universal Containers wants to use a Customer Community with Customer Community Plus licenses to allow their customers access to track how many containers they have rented and when they are due back. Universal Containers uses a Private sharing model for External users. Many of their customers are multi-national corporations with complex Account hierarchies. Each account on the hierarchy represents a department within the same business. One of the requirements is to allow certain community users within the same Account hierarchy to see several departments’ containers, based on a custom junction object that relates the Contact to the various Account records that represent the departments. Which solution solves these requirements?

A. A Visualforce page that uses a custom controller that specifies without sharing to expose the records

B. An Apex trigger that creates Apex managed sharing records based on the junction object's relationships

C. A Lightning web component on the Community Home Page that uses Lightning Data Services.

D. A custom list view on the junction object with filters that will show the proper records based on owner

Explanation:

To address Universal Containers' requirement of allowing certain community users with Customer Community Plus licenses to track rented containers and their due dates across multiple departments within the same Account hierarchy, while using a Private sharing model for external users, we need a solution that respects the complex Account hierarchies and leverages a custom junction object to define visibility. Let’s evaluate the options based on Salesforce sharing mechanics, community user permissions, and the described use case.

✔ Universal Containers uses a Private sharing model for external users, meaning community users can only see records they own or are explicitly shared with, unless additional sharing rules or mechanisms are implemented.

✔ The Account hierarchy represents departments within a multi-national corporation, and a custom junction object (e.g., Contact_Account_Junction__c) relates Contacts to various Account records, indicating which departments’ containers a user can access.

✔ Customer Community Plus licenses support roles and sharing, so records can be shared with these users through sharing rules, manual sharing, or Apex managed sharing. The solution must extend visibility beyond ownership without bypassing security.

A. A Visualforce page that uses a custom controller that specifies without sharing to expose the records.

This approach uses a Visualforce page with a custom controller marked without sharing. Apex in a without sharing class does not enforce the running user’s record-level sharing, so the controller’s queries can return any record regardless of who owns it or who it has been shared with. While this could retrieve container records across departments, it bypasses the Private sharing model entirely unless the controller reimplements every access check itself. This is a security risk and not a scalable way to manage visibility based on the junction object, making it incorrect.

B. An Apex trigger that creates Apex managed sharing records based on the junction object's relationships.

This solution involves an Apex trigger on the junction object (e.g., Contact_Account_Junction__c) or a related object (e.g., Container__c) that creates Apex managed sharing records. The trigger can query the junction object to determine which Accounts (departments) a Contact is related to, then generate Container__c sharing records (via the Container__Share object) to grant read access to the associated community user. This respects the Private sharing model, allows granular control based on the hierarchy and junction relationships, and works for Customer Community Plus users because that license participates in roles and sharing. Note that Container__Share exists only when the organization-wide default for Container__c is Private or Public Read Only. This is the most appropriate and secure solution.

C. A Lightning web component on the Community Home Page that uses Lightning Data Services.

Lightning Data Service (LDS) enables record access and caching in LWC, but it adheres to the user’s sharing rules. In a Private sharing model, LDS would only return records the community user owns or is shared with, unless additional sharing is configured. Without a mechanism to extend visibility based on the junction object and Account hierarchy, LDS alone cannot meet the requirement. This option is insufficient without pairing it with a sharing solution.

D. A custom list view on the junction object with filters that will show the proper records based on owner.

A custom list view on the junction object can filter records, but it is limited to the logged-in user’s permissions and ownership. Community users with Customer Community Plus licenses cannot see records outside their ownership or sharing scope, and list views do not dynamically adjust visibility based on Account hierarchies or junction relationships. This approach does not solve the cross-department visibility requirement, making it incorrect.

Correct Answer: B. An Apex trigger that creates Apex managed sharing records based on the junction object's relationships.

Reason:

➟ The Apex trigger can efficiently create sharing records to grant access to Container__c records associated with related Accounts, leveraging the junction object’s relationships.

➟ This solution maintains the Private sharing model’s security while extending visibility to departments within the Account hierarchy, aligning with the multi-national corporation’s structure.

➟ It supports Customer Community Plus users by ensuring they only see authorized records, configurable via the junction object.

Implementation Notes:

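➜ Create a trigger on Container__c or the junction object that queries Contact_Account_Junction__c for the affected Contacts and their related Accounts. A trigger runs as whoever saves the record, so it should work from the records being saved rather than from the current user.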

➜ Use Apex managed sharing to insert Container__Share records with ParentId (Container), UserOrGroupId (Contact’s User), and AccessLevel (Read).

➜ Ensure the junction object has fields like Contact__c and Account__c, and configure sharing rules or permissions to support the trigger.

➜ Test with a hierarchy and multiple departments in a sandbox to verify access.
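The calculation described in the notes above can be sketched as a toy model. This is plain Java rather than Apex, so that it runs anywhere; the `Junction`, `Container`, and `ShareRow` types and every ID are hypothetical stand-ins for Contact_Account_Junction__c, Container__c, and Container__Share. In a real trigger the resulting rows would be inserted as Container__Share records.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Toy model of the sharing calculation; all types and IDs are hypothetical.
class SharingSketch {
    // One junction row: this contact may see this account's containers.
    static final class Junction {
        final String contactId;
        final String accountId;
        Junction(String contactId, String accountId) {
            this.contactId = contactId;
            this.accountId = accountId;
        }
    }

    // A container rented by one account (department).
    static final class Container {
        final String id;
        final String accountId;
        Container(String id, String accountId) {
            this.id = id;
            this.accountId = accountId;
        }
    }

    // Mirrors the fields named above: ParentId, UserOrGroupId, AccessLevel.
    static final class ShareRow {
        final String parentId;
        final String userOrGroupId;
        final String accessLevel;
        ShareRow(String parentId, String userOrGroupId, String accessLevel) {
            this.parentId = parentId;
            this.userOrGroupId = userOrGroupId;
            this.accessLevel = accessLevel;
        }
        @Override
        public String toString() {
            return parentId + " -> " + userOrGroupId + " (" + accessLevel + ")";
        }
    }

    static List<ShareRow> buildShares(List<Junction> junctions,
                                      List<Container> containers,
                                      Map<String, String> userIdByContactId) {
        // Group the accounts each contact is related to, keeping input order.
        Map<String, Set<String>> accountsByContact = new LinkedHashMap<>();
        for (Junction j : junctions) {
            accountsByContact
                .computeIfAbsent(j.contactId, k -> new LinkedHashSet<>())
                .add(j.accountId);
        }
        List<ShareRow> shares = new ArrayList<>();
        for (Map.Entry<String, Set<String>> entry : accountsByContact.entrySet()) {
            String userId = userIdByContactId.get(entry.getKey());
            if (userId == null) {
                continue; // this contact has no community user to share with
            }
            for (Container c : containers) {
                if (entry.getValue().contains(c.accountId)) {
                    shares.add(new ShareRow(c.id, userId, "Read"));
                }
            }
        }
        return shares;
    }

    public static void main(String[] args) {
        List<Junction> junctions = List.of(
            new Junction("contact1", "deptA"), new Junction("contact1", "deptB"));
        List<Container> containers = List.of(
            new Container("box1", "deptA"), new Container("box2", "deptC"));
        // Prints one row: box1 -> user1 (Read)
        buildShares(junctions, containers, Map.of("contact1", "user1"))
            .forEach(System.out::println);
    }
}
```

The model only computes which rows to grant. A production trigger would also need to remove share rows when a junction record is deleted, which is left out here.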

Reference: Salesforce Security Guide - Apex Managed Sharing, Salesforce Community Guide - Experience Cloud User Licenses


A developer is tasked with ensuring that email addresses entered into the system for Contacts and for a custom object called Survey_Response__c do not belong to a list of blocked domains. The list of blocked domains is stored in a custom object for ease of maintenance by users. The Survey_Response__c object is populated via a custom Visualforce page. What is the optimal way to implement this?

A. Implement the logic in validation rules on the Contact and the Survey_Response__c objects.

B. Implement the logic in a helper class that is called by an Apex trigger on Contact and from the custom Visualforce page controller.

C. Implement the logic in an Apex trigger on Contact and also implement the logic within the custom Visualforce page controller.

D. Implement the logic in the custom Visualforce page controller and call that method from an Apex trigger on Contact.

Explanation:

To solve the problem of blocking email addresses from specific domains for both standard and custom objects, while maintaining a centralized, reusable solution, we need an approach that ensures consistency, scalability, and maintainability. Since the list of blocked domains is maintained in a custom object, the implementation must dynamically validate emails against that list across both Apex triggers and Visualforce pages.

✅ Correct Answer: B. Implement the logic in a helper class that is called by an Apex trigger on Contact and from the custom Visualforce page controller.

This approach promotes code reuse and centralized validation logic. A helper class acts as a single source of truth for blocked domain checks, which both the Contact trigger and the Visualforce page controller can invoke.

✔ For the Contact object, the Apex trigger ensures that email validation occurs regardless of the data entry method (UI, API, etc.).

✔ For Survey_Response__c, the Visualforce page uses a controller which can call the same helper method before insert/update.

✔ This avoids code duplication, improves maintainability, and ensures consistent business logic enforcement.

Example logic includes querying the blocked domain list in the helper class, parsing the domain from the email, and validating accordingly.
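The domain check itself is simple enough to sketch. The example below is plain Java, not Apex, and the class and method names are hypothetical; it assumes the blocked domains have already been loaded into memory, which in Apex would be a single SOQL query against the custom object that stores them.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Plain-Java sketch of the shared helper; names are hypothetical.
class BlockedDomainChecker {
    private final Set<String> blocked = new HashSet<>();

    // Normalizes the configured domains once so lookups are case-insensitive.
    BlockedDomainChecker(Iterable<String> blockedDomains) {
        for (String domain : blockedDomains) {
            blocked.add(domain.trim().toLowerCase(Locale.ROOT));
        }
    }

    // Returns the text after the last '@', lower-cased, or null if there is none.
    static String domainOf(String email) {
        if (email == null) {
            return null;
        }
        int at = email.lastIndexOf('@');
        if (at < 0 || at == email.length() - 1) {
            return null;
        }
        return email.substring(at + 1).trim().toLowerCase(Locale.ROOT);
    }

    // True when the email's domain is on the blocked list.
    boolean isBlocked(String email) {
        String domain = domainOf(email);
        return domain != null && blocked.contains(domain);
    }

    public static void main(String[] args) {
        BlockedDomainChecker checker = new BlockedDomainChecker(List.of("spam.example"));
        System.out.println(checker.isBlocked("a@SPAM.example")); // true
        System.out.println(checker.isBlocked("a@ok.example"));   // false
    }
}
```

Both callers, the Contact trigger and the Visualforce controller, would invoke the same `isBlocked` check, which is the point of option B. This sketch matches exact domains only; whether subdomains should also be blocked is a business decision the question does not settle.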

❌ A. Implement the logic in validation rules on the Contact and the Survey_Response__c objects.

Validation rules are declarative and fast, but they can't query custom objects, which is necessary to dynamically check against the blocked domains list. Since the domains list is stored in a custom object, validation rules alone are not sufficient or even capable of performing this logic.

❌ C. Implement the logic in an Apex trigger on Contact and also implement the logic within the custom Visualforce page controller.

This introduces duplicate logic in two separate places, making maintenance harder and risking inconsistent behavior over time. Any change to the logic would need to be updated in multiple places, which increases the likelihood of errors.

❌ D. Implement the logic in the custom Visualforce page controller and call that method from an Apex trigger on Contact.

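A controller is an ordinary Apex class, so a trigger can technically call one of its public methods, but doing so makes the trigger depend on UI code. Controller methods often rely on page state such as view state or page parameters, which does not exist when a trigger fires from the API or a data load. This blurs the separation between server-side business logic and the UI controller, so it is a poor design compared with a dedicated helper class.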

📝 Reference:

Apex Triggers Best Practices

Helper Class Pattern

Calling Shared Logic from Triggers and Controllers

✅ Final Answer:

B. Implement the logic in a helper class that is called by an Apex trigger on Contact and from the custom Visualforce page controller.

A Salesforce org has more than 50,000 contacts. A new business process requires a calculation that aggregates data from all of these contact records. This calculation needs to run once a day after business hours. Which two steps should a developer take to accomplish this?

(Choose 2 answers)

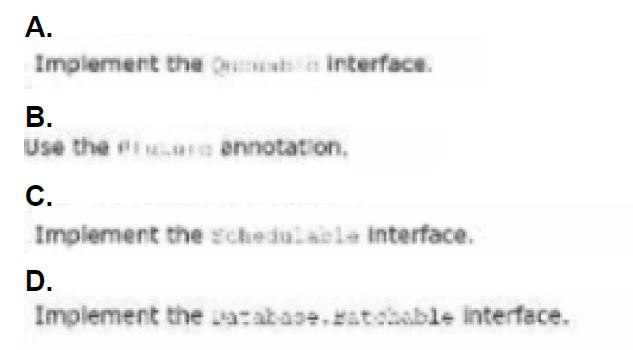

A. Implement the Queueable interface.

B. Use the Future annotation.

C. Implement the Schedulable interface.

D. Implement the Database.Batchable interface.

Explanation:

To address the requirement of running a daily aggregation process on more than 50,000 Contact records, we must ensure the solution handles large data volumes and supports scheduled execution. Here's a breakdown of each option:

✅ Correct Answers: C and D

✅ C. Implement the Schedulable interface.

Implementing the Schedulable interface allows developers to define classes that can be scheduled to run at specific times using either the Apex Scheduler UI or System.schedule() method in code. This is essential for running jobs after business hours automatically, without manual intervention.

✔ It ensures the business logic can run in a controlled, scheduled manner.

✔ Combined with batch processing, this will support high-volume data (like 50,000+ contacts).

✔ Scheduling is important because you can define cron expressions to run it nightly.

📘 Ref: Schedulable Interface Documentation

✅ D. Implement the Database.Batchable interface.

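The Database.Batchable interface enables Apex to process large data volumes asynchronously in manageable chunks (up to 50 million records when the start method returns a QueryLocator). A single transaction can retrieve at most 50,000 rows through SOQL, so more than 50,000 contacts cannot be read in one synchronous transaction, and batch processing is ideal.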

✔ Handles large datasets by breaking them into 200-record chunks (or a size you specify).

✔ Reduces risk of hitting limits and allows finer control over logic execution per batch.

✔ Must be used when iterating over large datasets to avoid hitting SOQL or CPU time limits.

📘 Ref: Batch Apex Documentation
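To make the chunking concrete, here is a toy model in plain Java (not Apex; all names are hypothetical). Each sublist stands for the scope passed to one execute call, and the running total stands for state carried between those calls, which in Apex is what the Database.Stateful marker interface is for.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of batch chunking in plain Java; names are hypothetical.
class BatchSketch {
    // Splits records into scopes of at most scopeSize, one per execute call.
    static <T> List<List<T>> chunk(List<T> records, int scopeSize) {
        if (scopeSize <= 0) {
            throw new IllegalArgumentException("scopeSize must be positive");
        }
        List<List<T>> scopes = new ArrayList<>();
        for (int i = 0; i < records.size(); i += scopeSize) {
            scopes.add(records.subList(i, Math.min(i + scopeSize, records.size())));
        }
        return scopes;
    }

    // Aggregates across scopes; the total survives from one scope to the next.
    static long sumInScopes(List<Integer> values, int scopeSize) {
        long total = 0;
        for (List<Integer> scope : chunk(values, scopeSize)) {
            for (int value : scope) {
                total += value;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        List<Integer> values = new ArrayList<>();
        for (int i = 1; i <= 50_001; i++) {
            values.add(i);
        }
        System.out.println(chunk(values, 200).size());  // 251
        System.out.println(sumInScopes(values, 200));   // 1250075001
    }
}
```

With 50,001 records and the default scope of 200, the job runs 251 times, and each run stays well under the per-transaction limits. The Schedulable class from option C would simply start this batch job at the chosen time each night.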

❌ A. Implement the Queueable interface.

Queueable Apex is great for chained asynchronous execution, but each job is still a single transaction bound by per-transaction limits such as 50,000 rows retrieved by SOQL, so one job cannot read all of the contacts.

➟ Cannot handle 50,000+ contacts unless manually broken into jobs, which is complex.

➟ Also lacks direct scheduling; you'd need another mechanism to trigger it daily.

❌ B. Use the Future annotation.

The @future annotation enables asynchronous processing but:

➟ It has strict limitations (like no return types, no chaining).

➟ Not suitable for large-volume record processing, due to limits like 50 future calls per transaction.

➟ Cannot be scheduled and isn’t designed for long-running or scheduled operations.

✅ Final Answer:

C. Implement the Schedulable interface.

D. Implement the Database.Batchable interface.

These two combined provide a scalable, automated, and governor-safe approach to executing large-scale, time-bound logic.

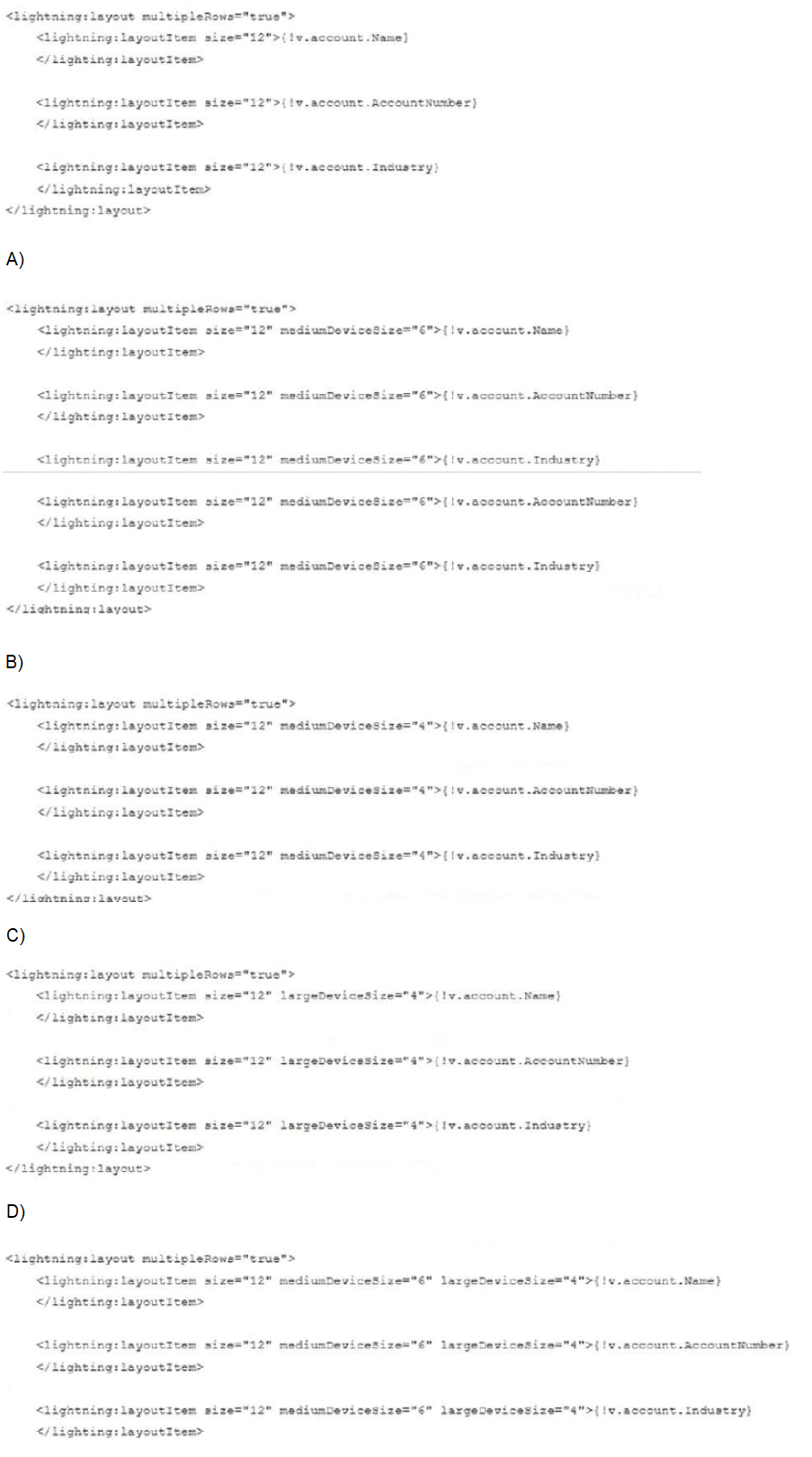

Refer to the component code and requirements below:

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

🧠 Understanding the Requirement:

From the structure of the base code snippet, the component is rendering 3 account fields (Name, AccountNumber, Industry) using Lightning layout items.

The goal is to optimize the display across different screen sizes (especially medium and large screens) by breaking up full-width fields into half-width or one-third-width segments when space allows.

🔎 Option Analysis:

✅ D. (Correct Answer)

size="12" ensures full width on small devices.

mediumDeviceSize="6" means two fields side-by-side on tablets.

largeDeviceSize="4" places three fields side-by-side on large screens (desktop).

This is mobile-first, responsive design, giving the best user experience on all device sizes.

This layout evenly distributes fields and avoids duplication (unlike Option A), while adapting smartly to device size.
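The arithmetic behind these sizes follows from the 12-column grid: an item that spans n columns fits 12 / n times on a row. A minimal sketch in plain Java, with a hypothetical class name, works this out for the three fields:

```java
// Sketch of the 12-column grid arithmetic; the class name is hypothetical.
class GridSketch {
    static final int COLUMNS = 12;

    // How many items of the given column span fit on one row.
    static int itemsPerRow(int span) {
        if (span < 1 || span > COLUMNS) {
            throw new IllegalArgumentException("span must be between 1 and 12");
        }
        return COLUMNS / span;
    }

    // Rows needed to lay out itemCount items of the given span.
    static int rowsNeeded(int itemCount, int span) {
        int perRow = itemsPerRow(span);
        return (itemCount + perRow - 1) / perRow; // ceiling division
    }

    public static void main(String[] args) {
        int fields = 3; // Name, AccountNumber, Industry
        System.out.println(rowsNeeded(fields, 12)); // 3 rows on small devices
        System.out.println(rowsNeeded(fields, 6));  // 2 rows on medium devices
        System.out.println(rowsNeeded(fields, 4));  // 1 row on large devices
    }
}
```

So option D stacks the three fields on a phone, shows two per row on a tablet (with the third wrapping to a second row), and puts all three on one row on a desktop.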

❌ A.

This code repeats fields (AccountNumber and Industry are listed twice).

Although it uses mediumDeviceSize="6", the duplication adds confusion and is likely unintended.

Not efficient; adds unnecessary DOM elements and can lead to inconsistent behavior or confusion for users.

Fails to meet the clean, responsive design goal.

❌ B.

Uses mediumDeviceSize="4" for each of the 3 fields.

With three items each set to 4, the spans total 12, so all three fit on one row on medium screens.

However, it lacks largeDeviceSize, so large screen optimization is missed.

This would look okay on tablets but may appear too spread on desktops and is not fully responsive.

❌ C.

Similar to B, but only uses largeDeviceSize="4".

Missing mediumDeviceSize, so tablets fall back to size="12" and stack the fields at full width.

Not mobile-optimized either since it only relies on size="12" and largeDeviceSize.

Incomplete responsiveness across all device classes.

📚 Reference:

Salesforce Lightning Layout Documentation

size sets the default width for every device size; mediumDeviceSize and largeDeviceSize override it on tablets and desktops.

Best practice: Mobile-first design with progressive enhancement using device-specific sizes.

A developer is debugging an Apex-based order creation process that has a requirement to have three savepoints, SP1, SP2, and SP3 (created in order), before the final execution of the process. During the final execution process, the developer has a routine to roll back to SP1 for a given condition. Once the condition is fixed, the code then calls a rollback to SP3 to continue with final execution. However, when the rollback to SP3 is called, a runtime error occurs. Why does the developer receive a runtime error?

A. SP3 became invalid when SP1 was rolled back.

B. The developer has too many DML statements between the savepoints.

C. The developer used too many savepoints in one trigger session.

D. The developer should have called SP2 before calling SP3.

Explanation:

The issue arises because a runtime error occurs when the developer attempts to roll back to savepoint SP3 after previously rolling back to SP1 during an Apex-based order creation process. The process involves three savepoints—SP1, SP2, and SP3—created in that order, with a rollback to SP1 under a specific condition, followed by an attempt to roll back to SP3 after fixing the condition. Let’s analyze why this error happens based on Salesforce’s savepoint and transaction management rules.

✔ Salesforce allows savepoints to manage transaction boundaries, enabling partial rollbacks within a single transaction.

✔ A savepoint marks a point in the transaction that can be rolled back to, discarding changes made after that point.

✔ When a rollback occurs to an earlier savepoint (e.g., SP1), all savepoints created after it (e.g., SP2, SP3) become invalid because the transaction state reverts to the earlier point.

✔ The runtime error likely stems from attempting to use an invalid savepoint (SP3) after rolling back to SP1.

A. SP3 became invalid when SP1 was rolled back.

This is correct. In Salesforce, when a rollback is performed to an earlier savepoint (e.g., SP1), all subsequent savepoints (SP2 and SP3) are invalidated. Attempting to roll back to SP3 after rolling back to SP1 triggers a runtime error because the SP3 variable no longer refers to a valid savepoint in the transaction. This explains the developer’s issue.
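The invalidation rule can be illustrated with a toy model. This is plain Java, not the Apex Database API, and the class, its methods, and the exception it throws are all hypothetical; it only mimics the rule that rolling back to a savepoint discards every savepoint created after it.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of savepoint invalidation in plain Java; not the Apex API.
class SavepointSketch {
    // Savepoints that are still valid, in creation order.
    private final List<String> active = new ArrayList<>();

    void setSavepoint(String name) {
        active.add(name);
    }

    // Rolling back keeps the target and drops every later savepoint.
    void rollback(String name) {
        int index = active.indexOf(name);
        if (index < 0) {
            throw new IllegalStateException("Savepoint " + name + " is no longer valid");
        }
        active.subList(index + 1, active.size()).clear();
    }

    boolean isValid(String name) {
        return active.contains(name);
    }

    public static void main(String[] args) {
        SavepointSketch tx = new SavepointSketch();
        tx.setSavepoint("SP1");
        tx.setSavepoint("SP2");
        tx.setSavepoint("SP3");
        tx.rollback("SP1");                     // SP2 and SP3 are discarded
        System.out.println(tx.isValid("SP3"));  // false
        try {
            tx.rollback("SP3");
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // Savepoint SP3 is no longer valid
        }
    }
}
```

In the model, as in the scenario, the only way to get a usable SP3 back after rolling back to SP1 is to set a new savepoint once the condition has been fixed.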

B. The developer has too many DML statements between the savepoints.

This is incorrect. Salesforce does not impose a specific limit on the number of DML statements between savepoints, only the overall governor limit of 150 DML statements per transaction. The error is tied to savepoint invalidation, not DML count, so this is not the cause.

C. The developer used too many savepoints in one trigger session.

This is incorrect. Salesforce does not define a separate savepoint limit that three savepoints could exceed; each savepoint and each rollback counts against the governor limit for DML statements (150 per transaction), and SP1, SP2, and SP3 are well within it. The error is not due to the number of savepoints but rather the invalidation of SP3 after rolling back to SP1.

D. The developer should have called SP2 before calling SP3.

This is incorrect. The sequence of savepoints (SP1, SP2, SP3) is fixed by their creation order, and rolling back to SP2 before SP3 is not a valid requirement. The problem is that SP3 is invalid after the SP1 rollback, regardless of SP2’s state.

Correct Answer: A. SP3 became invalid when SP1 was rolled back.

Reason: The runtime error occurs because rolling back to SP1 invalidates all later savepoints, including SP3. After the rollback to SP1, the transaction state is reset to that point, and any attempt to roll back to SP3 fails because it no longer exists. To fix this, the developer should avoid rolling back to an earlier savepoint if subsequent rollbacks to later savepoints are needed, or restructure the logic to use a single rollback path (e.g., roll back to SP2 or SP3 directly based on conditions).

Additional Notes: The developer could modify the process to:

✔ Use conditional logic to roll back to the appropriate savepoint (e.g., SP2 or SP3) without reverting to SP1 if SP3 is still needed.

✔ Implement a single transaction flow with nested try-catch blocks to handle errors without invalidating all savepoints.

✔ Test the rollback sequence in a sandbox with debug logs to confirm the transaction state.
