Salesforce-Platform-Developer-II Practice Test

Updated On 1-Jan-2026

202 Questions

How can a developer efficiently incorporate multiple JavaScript libraries in an Aura component?

A. Use JavaScript remoting and script tags.

B. Use CDNs with script attributes.

C. Implement the libraries in separate helper files.

D. Join multiple assets from a static resource.

Explanation:

To determine the most efficient way for a developer to incorporate multiple JavaScript libraries in an Aura component, we must consider performance, organization, and Salesforce best practices regarding asset loading in the Lightning framework.

✅ Correct Answer: D. Join multiple assets from a static resource.

The most efficient and recommended approach in Aura components is to combine (join) multiple JavaScript libraries into a single static resource, then include that resource in the component. This method reduces the number of HTTP requests, improves performance, and ensures the libraries are available in the secure, controlled Salesforce environment.

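Developers can use tools like Webpack or Gulp to bundle libraries (e.g., jQuery, Chart.js, Moment.js) into one minified file and upload it to Static Resources as a single JS file or inside a .zip archive. When the libraries are kept as separate files in one zipped static resource, the scripts attribute of ltng:require accepts a comma-separated list built with join, for example {!join(',', $Resource.myLibs + '/libOne.js', $Resource.myLibs + '/libTwo.js')} (resource and file names here are placeholders), and loads the files in the order listed. For a single bundled file, the Aura component loads it with:

<ltng:require scripts="{!$Resource.myBundledLibs}" afterScriptsLoaded="{!c.onLibsLoaded}" />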

This ensures that all necessary libraries are loaded efficiently and securely before the component executes its logic.

🔗 Reference: Salesforce Docs – Introducing Aura Components

❌ A. Use JavaScript remoting and script tags.

This option is misleading. JavaScript remoting is a way to call Apex methods asynchronously from JavaScript, not for loading libraries. Also, using raw <script> tags to load external libraries in Aura components violates Locker Service security policies and is strongly discouraged.

❌ B. Use CDNs with script attributes.

Loading libraries directly from CDNs (Content Delivery Networks) via script attributes is possible in raw HTML, but not secure or reliable in Lightning components, especially due to Locker Service and Content Security Policy (CSP) restrictions. Salesforce blocks or limits external script loading from untrusted domains for security reasons.

❌ C. Implement the libraries in separate helper files.

Helper files in Aura components are meant for custom application logic, not for including external libraries. You cannot just "implement" third-party JS libraries as helper files. This approach is incorrect and inefficient, especially for large libraries.

✅ Final Verdict:

D. Join multiple assets from a static resource is the correct and efficient solution for bundling multiple JS libraries in an Aura component, ensuring proper load order, performance, and compatibility with Salesforce security frameworks.

Consider the following code snippet:

A. Implement the without sharing keyword in the searchFeature Apex class.

B. Implement the seeAllData=true attribute in the @isTest annotation.

C. Enclose the method call within Test.startTest() and Test.stopTest().

D. Implement the setFixedSearchResults method in the test class.

Explanation:

Understanding the Problem

You’re dealing with a failing test class in Salesforce Apex where a SOSL search isn’t returning data, causing an assertion to fail. The test checks a method called searchRecords in a class named searchFeature. This method uses SOSL to search for a query term ('Test') across all fields of Account, Opportunity, and Lead objects, returning a list of lists of sObjects (one inner list per object type).

Why the Test Fails

In Apex, test methods run in isolation, meaning they don’t see an organization’s real data unless explicitly allowed (e.g., with seeAllData=true). Since the @TestSetup method is empty, no test records exist when the SOSL query runs. Normally, a SOSL query like FIND 'Test' RETURNING Account, Opportunity, Lead returns a list with three inner lists—one for each object type—each empty if no matches are found. So, records.size() should be 3 (the number of object types), and the assertion System.assertNotEquals(records.size(), 0) should pass, since 3 ≠ 0.

But the problem states the assertion fails, suggesting records.size() is 0 or something else is wrong. In test contexts, SOSL behavior can differ. Without test data or specific configuration, SOSL might not behave as expected. Salesforce documentation notes that SOSL in tests returns no results unless you use Test.setFixedSearchResults() to mock the results. If no fixed results are set, it may return an empty list ([]) rather than a list of empty lists ([[], [], []]), making records.size() 0, which fails the assertion.

Alternatively, the test might intend to verify that actual records are found (inner lists aren’t empty), but the assertion only checks records.size(). Since the problem specifies the assertion is System.assertNotEquals(records.size(), 0) and it’s failing, the most likely issue is that SOSL returns no data in the test context without proper setup.

Evaluating the Options

Let’s examine the four options to find the best fix:

Option A: Implement the without sharing keyword in the searchFeature class

What it does: The without sharing keyword makes the class ignore user sharing rules, running in system context.

Impact: This affects visibility of existing records based on permissions. However, in a test with no data (due to an empty @TestSetup), there are no records to see, regardless of sharing. This won’t create data or make SOSL return results.

Conclusion: This doesn’t solve the problem.

Option B: Implement the seeAllData=true attribute in the @isTest annotation

What it does: Adding @isTest(seeAllData=true) lets the test access all data in the Salesforce org.

Impact: If the org has Account, Opportunity, or Lead records with 'Test' in any field, the SOSL query might find them, populating records with non-empty inner lists. Since records.size() would still be 3 (one list per object type), the assertion could pass if data exists. However, this relies on unpredictable org data, making the test brittle and non-portable—bad practice in Apex testing.

Conclusion: This works only if suitable data exists, but it’s unreliable and discouraged.

Option C: Enclose the method call within Test.startTest() and Test.stopTest()

What it does: These methods mark the test’s main execution block, resetting governor limits and ensuring asynchronous code completes before assertions.

Impact: This is good practice for testing, especially with asynchronous operations, but it doesn’t provide data or alter SOSL behavior. With no test records, the SOSL query still finds nothing.

Conclusion: This doesn’t address the data issue.

Option D: Implement the setFixedSearchResults method in the test class

What it does: Test.setFixedSearchResults() lets you define which records SOSL returns in a test by passing an array of record IDs.

Impact: You can create test records (e.g., an Account, Opportunity, and Lead with 'Test' in their fields), insert them, and pass their IDs to setFixedSearchResults(). When searchRecords('Test') runs, SOSL returns these records, ensuring records has three inner lists, some non-empty. Since records.size() is 3, the assertion passes. This keeps the test self-contained and reliable, aligning with Apex best practices.

Conclusion: This directly fixes the issue by providing controlled test data.

Choosing the Best Solution

Option A fails because it doesn’t create data.

Option B might work but depends on org data, which is unreliable and against testing principles.

Option C is irrelevant to the data problem.

Option D ensures the SOSL query returns results by mocking them, making the test pass consistently without external dependencies.

Option D is the clear winner. It leverages Test.setFixedSearchResults(), a standard tool for testing SOSL in Apex, ensuring the test controls what SOSL returns. You’d typically:

Create test records in the test method or @TestSetup.

Set their IDs as fixed search results.

Run the SOSL query and assert the results.

This approach confirms records.size() is 3 and allows further checks (e.g., inner list contents), though the given assertion only requires a non-zero size.
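The three steps above can be sketched as follows. This is a minimal sketch rather than the original test class: it assumes searchFeature.searchRecords is a static method that returns the SOSL result as List<List<SObject>> with Account listed first in the RETURNING clause, and the test class and method names are hypothetical.

```apex
@isTest
private class SearchFeatureTest {   // hypothetical test class name
    @isTest
    static void searchRecordsReturnsMockedResults() {
        // 1. Create the records the search should "find".
        Account acct = new Account(Name = 'Test Account');
        Lead ld = new Lead(LastName = 'Test Lead', Company = 'Test Company');
        insert acct;
        insert ld;

        // 2. Every SOSL query that runs later in this test method returns
        //    only records whose IDs are listed here.
        Test.setFixedSearchResults(new List<Id>{ acct.Id, ld.Id });

        // 3. Run the search (assumed static signature) and assert on the result.
        Test.startTest();
        List<List<SObject>> records = searchFeature.searchRecords('Test');
        Test.stopTest();

        System.assertNotEquals(0, records.size(), 'SOSL should return result lists');
        // Assumes Account is the first object in the RETURNING clause.
        System.assertEquals(1, records[0].size(), 'The mocked Account should be returned');
    }
}
```

Checking an inner list as well as the outer size makes the test fail if the mocked records stop coming back, which the outer-size assertion alone would not catch.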

Final Recommendation

The best way to fix the failing test is to implement the setFixedSearchResults method in the test class (Option D). This ensures the SOSL query returns predefined records, making the assertion System.assertNotEquals(records.size(), 0) pass reliably in the isolated test environment.

A developer has working business logic code, but sees the following error in the test class:

You have uncommitted work pending. Please commit or rollback before calling out.

What is a possible solution?

A. Call support for help with the target endpoint, as it is likely an external code error.

B. Use Test.isRunningTest() before making the callout to bypass it in test execution.

C. Set seeAllData to true at the top of the test class, since the code does not fail in practice.

D. Rewrite the business logic and test classes with @TestVisible set on the callout.

Explanation:

✅ B. Use Test.isRunningTest() before making the callout to bypass it in test execution

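This is the correct solution among the options. Guarding the callout with Test.isRunningTest() skips it during test execution, so the DML performed earlier in the test no longer collides with a callout. Salesforce does not allow real callouts in test methods; the more thorough approach is Test.setMock(), which replaces the actual HTTP callout with a mocked response from a class that implements HttpCalloutMock and returns a fake HttpResponse. When the test inserts data before the callout, that DML must run before Test.startTest() and the mocked callout must run between Test.startTest() and Test.stopTest(); otherwise the “uncommitted work pending” error still occurs. Mocking is standard best practice for unit tests that include callouts.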

🔗 Reference: Salesforce Docs – Test.setMock() and HTTP Callouts
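A minimal sketch of the mocking approach, assuming a plain HTTP GET; the class names, endpoint, and response body are placeholders and are not taken from the question. Test data is inserted before Test.startTest(), and the mock is registered inside the startTest/stopTest block.

```apex
@isTest
private class CalloutAfterDmlTest {   // hypothetical test class name
    // Hypothetical mock: returns a canned response instead of calling out.
    private class OkMock implements HttpCalloutMock {
        public HttpResponse respond(HttpRequest req) {
            HttpResponse res = new HttpResponse();
            res.setStatusCode(200);
            res.setHeader('Content-Type', 'application/json');
            res.setBody('{"status":"ok"}');
            return res;
        }
    }

    @isTest
    static void calloutWorksAfterSetupDml() {
        // DML for test data happens before Test.startTest().
        insert new Account(Name = 'Callout Test');

        Test.startTest();
        // Register the mock, then perform the callout inside the block.
        Test.setMock(HttpCalloutMock.class, new OkMock());
        HttpRequest req = new HttpRequest();
        req.setEndpoint('https://example.com/api'); // placeholder endpoint
        req.setMethod('GET');
        HttpResponse res = new Http().send(req);
        Test.stopTest();

        System.assertEquals(200, res.getStatusCode(), 'The mock response should be returned');
    }
}
```

In a real test the callout would normally live in the business-logic class under test; it is inlined here only to keep the sketch self-contained.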

❌ A. Call support for help with the target endpoint, as it is likely an external code error

This is not a valid solution. The error message “You have uncommitted work pending” is generated by the Salesforce platform, not the external endpoint. It happens because a DML operation (like an insert or update) was performed before a callout, which violates Salesforce’s internal execution rules. The issue is not external, so contacting Salesforce or an external API provider won’t help. You must resolve this in your Apex logic by either reordering the operations or mocking the callout in a test environment.

🔗 Reference: Salesforce Developer Forums – Uncommitted Work Pending Error
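Reordering the operations, as mentioned above, could look like the sketch below: the callout runs first and the DML follows it in the same transaction. The class, method, endpoint, and the choice of the Description field are hypothetical and only illustrate the ordering.

```apex
public with sharing class AccountStatusService {   // hypothetical class
    public static void refreshStatus(Id accountId) {
        // Callout first: no DML has run yet in this transaction.
        HttpRequest req = new HttpRequest();
        req.setEndpoint('https://example.com/status'); // placeholder endpoint
        req.setMethod('GET');
        HttpResponse res = new Http().send(req);

        // DML last: saving after the callout does not raise the error.
        update new Account(Id = accountId, Description = res.getStatus());
    }
}
```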

❌ C. Set seeAllData to true at the top of the test class, since the code does not fail in practice.

This is an incorrect workaround. The seeAllData=true annotation in test classes allows access to the org’s real data but has no relation to callouts or DML operations. Enabling this flag does not suppress or solve the uncommitted work issue. It's also discouraged because it makes tests unreliable and dependent on real data. The proper fix is to use Test.setMock() to simulate the callout and avoid the DML-before-callout violation altogether.

🔗 Reference: Salesforce Docs – Test Classes and seeAllData

❌ D. Rewrite the business logic and test classes with @TestVisible set on the callout.

This is also not a valid solution. The @TestVisible annotation is used to expose private members or methods in Apex classes to test methods, allowing you to test logic that is normally inaccessible. However, it does nothing to fix callout-related errors or execution order issues like “uncommitted work pending.” Adding @TestVisible to a method does not bypass the restriction of DML before callout. You still need to use mocking techniques to ensure the callout doesn’t actually execute during testing.

🔗 Reference: Salesforce Docs – @TestVisible Annotation

✅ Final Answer:

B. Use Test.isRunningTest() before making the callout to bypass it in test execution

Given the following information regarding Universal Containers (UC):

* UC represents their customers as Accounts in Salesforce.

* All customers have a Customer_Number__c that is unique across all of UC's systems.

* UC also has a custom Invoice__c object, with a Lookup to Account, to represent invoices that are sent out from their external system.

UC wants to integrate invoice data back into Salesforce so Sales Reps can see when a customer pays their bills on time.

What is the optimal way to implement this?

A. Ensure Customer_Number__c is an External ID and that a custom field Invoice_Number__c is an External ID and Upsert invoice data nightly.

B. Use Salesforce Connect and external data objects to seamlessly import the invoice data into Salesforce without custom code.

C. Create a cross-reference table in the custom invoicing system with the Salesforce Account ID of each Customer and insert invoice data nightly.

D. Query the Account Object upon each call to insert invoice data to fetch the Salesforce ID corresponding to the Customer Number on the invoice.

Explanation:

To determine the optimal way for Universal Containers (UC) to integrate invoice data into Salesforce so Sales Reps can see when customers pay their bills on time, we need a solution that efficiently links invoices from an external system to the corresponding Salesforce Accounts, given the unique Customer_Number__c field and the Invoice__c object with a Lookup to Account. The solution should minimize custom code, ensure data integrity, and support nightly data imports. Let’s evaluate the options based on Salesforce integration best practices.

✔ UC uses Customer_Number__c as a unique identifier across all systems, making it a key for matching external invoice data to Salesforce Accounts.

✔ The Invoice__c object has a Lookup to Account, requiring a reference to the parent Account to establish the relationship.

✔ The integration should handle large datasets (implied by "nightly" imports) and avoid performance issues like excessive SOQL queries.

A. Ensure Customer_Number__c is an External ID and that a custom field Invoice_Number__c is an External ID and Upsert invoice data nightly.

This approach designates Customer_Number__c on the Account object and a new Invoice_Number__c on the Invoice__c object as External IDs. An External ID enables upsert operations to match and update records using these unique fields instead of Salesforce IDs. The nightly upsert can use Customer_Number__c to link invoices to Accounts and Invoice_Number__c to identify or create Invoice__c records. This minimizes SOQL queries, supports bulk operations, and ensures data integrity with minimal custom code (e.g., via Data Loader or an ETL tool), making it highly efficient and optimal.

B. Use Salesforce Connect and external data objects to seamlessly import the invoice data into Salesforce without custom code.

Salesforce Connect with external objects allows real-time access to external data without importing it into Salesforce, using OData or other protocols. While this avoids custom code and nightly imports, it requires the external system to expose invoice data via a supported adapter and does not store the data locally for Sales Reps to view payment status historically. This approach is less suitable for tracking payment timeliness, which implies a need for persisted data, making it suboptimal.

C. Create a cross-reference table in the custom invoicing system with the Salesforce Account ID of each Customer and insert invoice data nightly.

This solution involves maintaining a cross-reference table in the external system to map Customer_Number__c to Salesforce Account IDs, then using this to insert Invoice__c records nightly. This requires ongoing synchronization of Account IDs in the external system, adding complexity and potential for data mismatch if Accounts are updated in Salesforce. It also relies on custom integration logic to perform the insert, which is less efficient than leveraging Salesforce’s upsert capability, making this less optimal.

D. Query the Account Object upon each call to insert invoice data to fetch the Salesforce ID corresponding to the Customer Number on the invoice.

This approach queries the Account object for each invoice insert to match Customer_Number__c to the Account ID, then sets the Invoice__c Lookup. For a nightly batch, this results in numerous SOQL queries (one per invoice), risking governor limits (100 SOQL queries per synchronous Apex transaction) and poor performance with large datasets. This is inefficient compared to using External IDs, making it the least optimal solution.

Correct Answer: A. Ensure Customer_Number__c is an External ID and that a custom field Invoice_Number__c is an External ID and Upsert invoice data nightly.

Reason:

✔ Designating Customer_Number__c as an External ID on Account and adding Invoice_Number__c as an External ID on Invoice__c allows upsert operations to match records using these unique identifiers, eliminating the need for SOQL queries to look up Account IDs.

✔ The nightly upsert can process thousands of invoices efficiently, linking them to Accounts via Customer_Number__c and creating or updating Invoice__c records with Invoice_Number__c, ensuring Sales Reps see payment status.

✔ This approach leverages Salesforce’s built-in upsert functionality (e.g., via Data Loader, MuleSoft, or Apex), minimizing custom code and optimizing performance for large-scale integration.

Implementation Notes:

✔ Set Customer_Number__c as an External ID field on the Account object (via Setup > Object Manager > Account > Fields & Relationships).

✔ Add Invoice_Number__c as a custom field on Invoice__c, mark it as an External ID, and ensure it’s unique.

✔ Use an ETL tool or Apex batch job with Database.upsert() to process the nightly CSV or API feed, mapping Customer_Number__c to Account and Invoice_Number__c to Invoice__c.

✔ Include payment date fields (e.g., Payment_Date__c) in the invoice data to track timeliness.

✔ Test with a sample dataset in a sandbox to verify matching and upsert behavior.
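The notes above can be sketched in Apex as follows. This is an illustration under stated assumptions, not UC's actual integration: it assumes Customer_Number__c on Account and Invoice_Number__c on Invoice__c are External ID fields, that the lookup field is named Account__c (so the relationship is Account__r), and that Payment_Date__c exists. The class and method names are hypothetical.

```apex
public with sharing class InvoiceUpsertSketch {   // hypothetical class
    public static List<Database.UpsertResult> upsertInvoice(
        String customerNumber, String invoiceNumber, Date paymentDate
    ) {
        Invoice__c inv = new Invoice__c(
            Invoice_Number__c = invoiceNumber,
            Payment_Date__c = paymentDate
        );
        // Point the lookup at the parent by its External ID;
        // no SOQL query is needed to find the Account Id.
        inv.Account__r = new Account(Customer_Number__c = customerNumber);

        // Match on Invoice_Number__c: update when it exists, insert otherwise.
        // allOrNone = false lets other rows in a batch succeed if one fails.
        Schema.SObjectField extId = Invoice__c.Fields.Invoice_Number__c;
        return Database.upsert(new List<Invoice__c>{ inv }, extId, false);
    }
}
```

A nightly job would pass the whole batch to Database.upsert as one list rather than calling this once per invoice; the single-record form is used here only for brevity.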

Reference: Salesforce Integration Guide - External ID Fields, Salesforce Data Loader Guide - Upsert Operations.

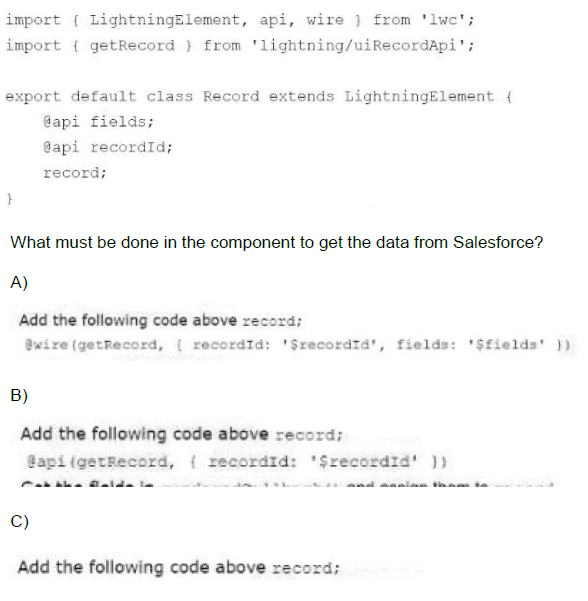

A developer is building a Lightning web component that retrieves data from Salesforce and assigns it to the record property.

A. Option A

B. Option B

C. Option C

Explanation:

To retrieve record data in a Lightning Web Component (LWC), the component uses the @wire decorator with the getRecord wire adapter from lightning/uiRecordApi. This pattern is declarative and reactive: the wire service provisions fresh data whenever a reactive parameter (such as recordId or fields) changes.

✅ Correct Answer: A.

This is the correct syntax for retrieving record data using the @wire service:

@wire(getRecord, { recordId: '$recordId', fields: '$fields' })

record;

The @wire decorator wires the getRecord adapter to the record property.

The recordId and fields are reactive variables (note the $), meaning if their values change, the wire function re-executes.

This is the standard and recommended way to retrieve record data in LWC using the UI Record API.

❌ B.

@api(getRecord, { recordId: '$recordId' })

This syntax is invalid for two reasons:

@api is used to expose a property or method to the parent component — it cannot be used to call Apex or UI APIs.

The syntax tries to call getRecord improperly — it doesn’t use @wire and doesn’t pass required parameters correctly.

❌ C.

C is incomplete — it doesn't provide any code, so it can't retrieve data from Salesforce.

📚 Reference:

LWC Docs: Get Record Data with Lightning Data Service

getRecord adapter

✅ Final Answer:

A. Option A
