Last Updated On : 20-Feb-2026

Salesforce Certified Platform Developer II (SP25) Practice Test

Prepare with our free Salesforce Certified Platform Developer II (SP25) sample questions and pass with confidence. Our Salesforce-Platform-Developer-II practice test is designed to help you succeed on exam day.

Salesforce 2026

Which two queries are selective SOQL queries and can be used for a large data set of 200,000 Account records?

(Choose 2 answers)

A. SELECT Id FROM Account WHERE Name LIKE '%-NULL'

B. SELECT Id FROM Account WHERE Name != ''

C. SELECT Id FROM Account WHERE Name IN (List of Names) AND Customer_Number__c = 'ValueA'

D. SELECT Id FROM Account WHERE Id IN (List of Account Ids)

Explanation:

To determine which SOQL queries are selective, we must understand what Salesforce means by a selective query, especially in the context of large data volumes (like 200,000+ records):

🔎 What is a Selective SOQL Query?

A selective query filters on indexed fields and matches a small enough share of the rows that the query optimizer can use an index instead of scanning the whole table. Salesforce enforces this on large objects: a non-selective query that runs, for example, in an Apex trigger against an object with more than 200,000 records fails with a non-selective query error.

A query is usually selective if:

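✔ It filters on indexed fields (such as Id, Name, OwnerId, CreatedDate, SystemModstamp, lookup and master-detail fields, and custom fields marked as External ID or Unique).

✔ The filter matches few enough rows to stay under the index threshold: for a standard index, 30% of the first million records (15% beyond that, up to 1 million rows); for a custom index, 10% of the first million records (5% beyond that, up to 333,333 rows).

✔ It uses operators the optimizer can index: =, IN, <, >, or LIKE without a leading wildcard. Negative operators such as !=, NOT LIKE, and EXCLUDES, and LIKE with a leading % wildcard, cannot use an index.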
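To make the threshold arithmetic concrete, here is a minimal Apex sketch that computes the row counts below which a filter can still be treated as selective, using Salesforce's documented query optimizer thresholds for standard and custom indexes. The class name `SelectivityThreshold` and its method names are hypothetical helpers for illustration, not a platform API.

```apex
public class SelectivityThreshold {
    private static final Long ONE_MILLION = 1000000L;

    // Standard index: 30% of the first million rows plus 15% of the
    // remainder, capped at 1,000,000 rows.
    public static Long standardIndex(Long totalRows) {
        Long firstMillion = Math.min(totalRows, ONE_MILLION);
        Long remainder = Math.max(totalRows - ONE_MILLION, 0L);
        Long threshold = firstMillion * 30 / 100 + remainder * 15 / 100;
        return Math.min(threshold, ONE_MILLION);
    }

    // Custom index: 10% of the first million rows plus 5% of the
    // remainder, capped at 333,333 rows.
    public static Long customIndex(Long totalRows) {
        Long firstMillion = Math.min(totalRows, ONE_MILLION);
        Long remainder = Math.max(totalRows - ONE_MILLION, 0L);
        Long threshold = firstMillion * 10 / 100 + remainder * 5 / 100;
        return Math.min(threshold, 333333L);
    }
}
```

For the 200,000 Account records in the question, this gives 60,000 rows for a filter on a standard index and 20,000 rows for a filter on a custom index.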

✅ Correct Answers: C and D

✅ C. SELECT Id FROM Account WHERE Name IN (List of Names) AND Customer_Number__c = 'ValueA'

✔ Name is a standard indexed field.

✔ Customer_Number__c is a custom field, and if it's a Unique or External ID, it is also indexed.

✔ The use of IN with a short list of values is selective, as long as the matching rows stay under the index threshold.

✔ The AND condition combining indexed fields makes the query selective.

✅ This is a good example of a selective query.

✅ D. SELECT Id FROM Account WHERE Id IN (List of Account Ids)

✔ Id is always indexed and highly selective.

✔ Using IN with a reasonable number of values (e.g., 100 or fewer) is very efficient.

✔ Salesforce even recommends this pattern for bulk-safe queries.

✅ This is definitely selective and scalable for large datasets.
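In Apex, the pattern from option D is usually written with a bind variable rather than a literal list. The sketch below assumes a hypothetical selector class named `AccountSelector`; only the SOQL shape comes from the question.

```apex
public class AccountSelector {
    // Returns the Accounts whose Ids are in the given set.
    // Filtering on Id uses the primary key index, so the query stays
    // selective no matter how many Account rows the org holds.
    public static List<Account> byIds(Set<Id> accountIds) {
        if (accountIds == null || accountIds.isEmpty()) {
            return new List<Account>(); // skip the query when there is nothing to look up
        }
        return [SELECT Id FROM Account WHERE Id IN :accountIds];
    }
}
```

Collecting the Ids into a set first and querying once keeps the call bulk-safe when it is used from a trigger.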

❌ A. SELECT Id FROM Account WHERE Name LIKE '%-NULL'

➟ LIKE queries using wildcards at the beginning (e.g., %text) are not selective.

➟ This forces a full scan of all records to find matches.

➟ Even though Name is indexed, wildcards at the beginning disable the index.

❌ This is non-selective and dangerous for large data volumes.

❌ B. SELECT Id FROM Account WHERE Name != ''

➟ The != (not equal to) operator is not selective in Salesforce.

➟ It causes a full table scan, as it must check every record.

➟ Even if Name is indexed, the != operator disables index usage.

❌ This is non-selective and risky on objects with 200k+ records.

✅ Final Answer:

C and D are the only selective queries among the options provided.

They follow Salesforce best practices for large data sets and governor limits.

🔗 Reference: Salesforce Developer Guide – Selective Queries and Query Optimization

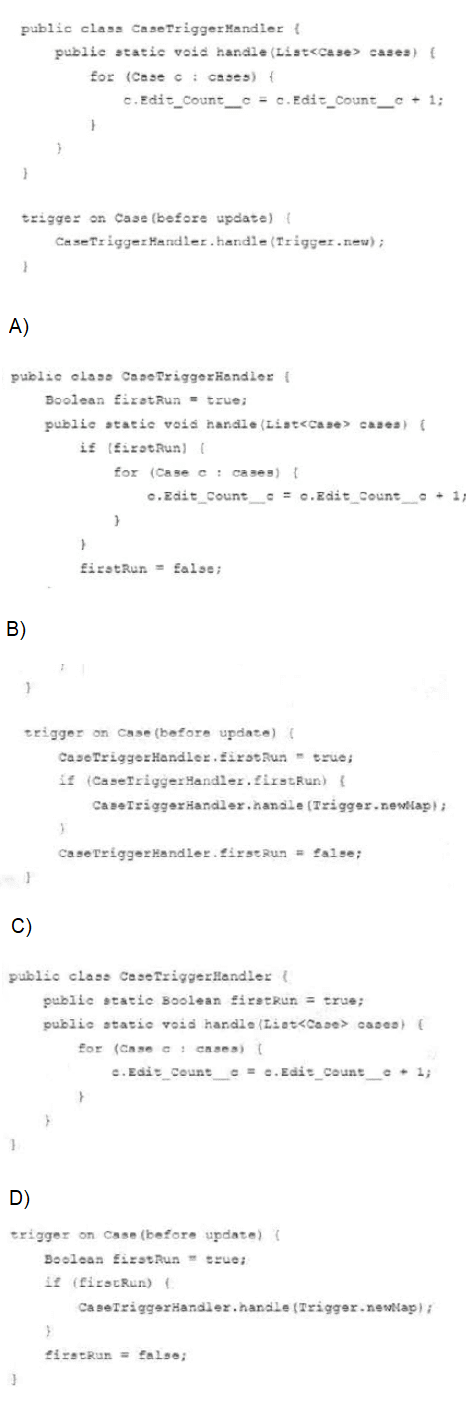

An Apex trigger and Apex class increment a counter, Edit_Count__c, any time the Case is changed.

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

To determine the correct option for incrementing the Edit_Count__c field on a Case object whenever it is changed, we need to analyze the provided Apex trigger and class implementations. The trigger is a before update trigger on the Case object, and the goal is to ensure the Edit_Count__c field increments only once per update operation, avoiding multiple increments due to recursive trigger calls. Let’s evaluate each option step-by-step.

Trigger and Requirement Analysis

The trigger is defined as:

// Trigger name assumed here; the original listing omits it

trigger CaseTrigger on Case (before update) {

    CaseTriggerHandler.handle(Trigger.new);

}

This trigger calls a static method handle in the CaseTriggerHandler class, passing the list of new Case records (Trigger.new). The handle method is responsible for incrementing the Edit_Count__c field. Since it’s a before update trigger, the field can be modified directly on the records in Trigger.new without requiring a separate DML operation. However, a common challenge with triggers is recursion—e.g., updating the record within the trigger could cause it to fire again, leading to unintended multiple increments. We need a mechanism to prevent this, ensuring the counter increments only once per update.

Evaluating the Options

Option A:

Analysis: This implementation simply loops through the cases and increments Edit_Count__c by 1 for each record. However, it lacks any recursion control. If the trigger update causes another update (e.g., via a workflow or process), the trigger will re-execute, incrementing the counter multiple times. This does not meet the requirement of a single increment per change.

Verdict: Incorrect due to potential recursion issues.

Option B:

Analysis: This approach uses a firstRun Boolean to control execution. The trigger sets firstRun = true before calling handle, and the handle method checks this flag to perform the increment only if firstRun is true, then sets it to false. However, there are issues:

➜ firstRun is an instance variable in the class, but the handle method is static. Static methods cannot directly access instance variables unless they are also static or passed as parameters, which isn’t done here. This code would not compile due to this mismatch.

➜ Even if made static (e.g., static Boolean firstRun), the trigger and class logic would need to align properly. The current setup has redundant checks and could still fail if multiple updates occur in the same transaction context, as firstRun would reset with each trigger invocation.

Verdict: Incorrect due to compilation errors and ineffective recursion control.

Option C:

Analysis: This version declares firstRun as a static Boolean initialized to true but does not use it in the handle method to control execution. The handle method always increments Edit_Count__c for each case, with no recursion prevention. Like Option A, this risks multiple increments if the trigger fires again due to a subsequent update. The firstRun variable is unused, making this implementation incomplete.

Verdict: Incorrect due to lack of recursion control.

Option D:

Analysis: Here, the trigger checks a firstRun Boolean before calling handle and sets it to false afterward, so the increment logic runs only on the first trigger invocation. If a workflow or process updates the record later in the same transaction and the trigger fires again, the guard is already false and the counter is not incremented a second time. For this to work, firstRun has to be transaction-scoped state, that is, a static variable: static variables keep their value across trigger invocations within one transaction and reset when a new transaction starts, whereas a variable local to the trigger body would be re-initialized on every invocation. The handle method then increments Edit_Count__c for each case, once per update.

Verdict: Correct, as it prevents recursion and ensures a single increment per change.

Conclusion:

The requirement is to increment Edit_Count__c once per Case update, avoiding multiple increments due to recursion. Option D checks a firstRun Boolean in the trigger to control execution, ensuring the handle method runs only once per update. Options A and C lack recursion control, while Option B has a compilation error due to the instance variable mismatch. Thus, the correct answer is:

D. Option D
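Because the exhibit is not reproduced in the text, the following is only a sketch of the general recursion-guard technique, not the code from Option D. It assumes a hypothetical handler class named `CaseEditCounter` and a Number field `Edit_Count__c` on Case, and it keeps the guard inside the handler rather than in the trigger.

```apex
public class CaseEditCounter {
    // Static variables live for the whole transaction, so this flag is
    // still false if the trigger fires again after a workflow or
    // process updates the same Case.
    private static Boolean firstRun = true;

    public static void handle(List<Case> cases) {
        if (!firstRun) {
            return; // this edit was already counted in the current transaction
        }
        firstRun = false;
        for (Case c : cases) {
            // In a before update trigger the field can be set directly; no DML needed.
            Decimal current = (c.Edit_Count__c == null) ? 0 : c.Edit_Count__c;
            c.Edit_Count__c = current + 1;
        }
    }
}
```

One known trade-off of a plain Boolean guard is that it also skips later 200-record chunks of a large DML operation in the same transaction, so tracking a set of already-processed Ids is a common refinement.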

A company has an Apex process that makes multiple extensive database operations and web service callouts. The database processes and web services can take a long time to run and must be run sequentially. How should the developer write this Apex code without running into governor limits and system limitations?

A. Use Queueable Apex to chain the jobs to run sequentially.

B. Use Apex Scheduler to schedule each process.

C. Use multiple @future methods for each process and callout.

D. Use Limits class to stop entire process once governor limits are reached.

Explanation:

To answer this question effectively, we must evaluate how to handle long-running, sequential operations (both database operations and web service callouts) in Apex without hitting governor limits, such as:

➜ The maximum CPU time (10,000 ms for synchronous transactions, 60,000 ms for asynchronous ones)

➜ The maximum number of callouts per transaction (100)

➜ The maximum number of DML statements (150)

Salesforce imposes strict governor limits to ensure multitenancy and performance. So to handle large, sequential operations, we need an architecture that breaks the work into manageable units, supports asynchronous execution, and allows chaining for sequential execution.

✅ Correct Answer: A. Use Queueable Apex to chain the jobs to run sequentially.

✅ Explanation:

Queueable Apex is ideal for running complex or long-running logic asynchronously. It allows you to:

➜ Perform large DML operations and web service callouts (callouts are allowed when the class also implements Database.AllowsCallouts).

➜ Chain the next job by calling System.enqueueJob() from the execute() method; each executing job can enqueue one child job.

➜ Maintain state between chained jobs by passing it through constructor parameters and member variables.

This makes it perfect for sequential processing, where you need to perform one step, then move to the next only after completion (like billing → tax calculation → notification). You can organize each major step into a separate Queueable class and enqueue the next from within the current.

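public class StepOne implements Queueable, Database.AllowsCallouts {

    public void execute(QueueableContext context) {

        // Do step one (DML and/or callouts)

        // Chain the next step; only one child job can be enqueued per execution

        System.enqueueJob(new StepTwo());

    }

}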
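A slightly fuller sketch of the same chaining idea shows how state can travel between jobs through the constructor. It assumes a hypothetical class named `SequentialStep` and a caller-supplied list of endpoints (for example Named Credential URLs); neither comes from the question.

```apex
public class SequentialStep implements Queueable, Database.AllowsCallouts {
    private final List<String> endpoints; // one endpoint per step, in order
    private final Integer stepIndex;      // which step this job runs

    public SequentialStep(List<String> endpoints, Integer stepIndex) {
        this.endpoints = endpoints;
        this.stepIndex = stepIndex;
    }

    public void execute(QueueableContext context) {
        HttpRequest req = new HttpRequest();
        req.setEndpoint(endpoints[stepIndex]);
        req.setMethod('GET');
        HttpResponse res = new Http().send(req);

        // Stop the chain if this step failed.
        if (res.getStatusCode() != 200) {
            System.debug(LoggingLevel.ERROR,
                'Step ' + stepIndex + ' failed: ' + res.getStatus());
            return;
        }

        // Enqueue the next step only after this one has finished.
        if (stepIndex + 1 < endpoints.size()) {
            System.enqueueJob(new SequentialStep(endpoints, stepIndex + 1));
        }
    }
}
```

The chain would be started with a single call such as `System.enqueueJob(new SequentialStep(endpoints, 0));`. Each job runs in its own transaction with fresh governor limits, which is what lets a long sequence finish without exhausting the limits of any one transaction.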

🔗 Reference: Salesforce Docs – Queueable Apex

❌ B. Use Apex Scheduler to schedule each process.

The Apex Scheduler is designed for time-based or recurring operations, not for coordinating sequential steps within the same business process. A scheduled job starts at its set time whether or not the previous step has finished, so it is not suitable for multi-step workflows that depend on the completion of prior steps.

❌ C. Use multiple @future methods for each process and callout.

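@future methods are limited: a future method cannot call another future method, so jobs cannot be chained; execution order is not guaranteed; and parameters are restricted to primitives and collections of primitives. Callouts are allowed only when the method is annotated with @future(callout=true).

@future methods must also return void, which makes coordination between steps harder.

Queueable Apex covers the same use cases with fewer restrictions and is generally preferred for new code.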

❌ D. Use Limits class to stop entire process once governor limits are reached.

The Limits class allows you to check current resource usage, but does not prevent governor limits from being hit — it can only be used for fallback logic. Also, stopping the process mid-execution leaves the business process incomplete. This is more of a monitoring tool, not a control mechanism for architecture.
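As a rough illustration of that monitoring role, the sketch below checks remaining headroom before doing more work. The class name `LimitCheck` and the 80% CPU margin are assumptions chosen for the example; the `Limits` methods themselves are standard.

```apex
public class LimitCheck {
    // True while the transaction still has room for at least one more
    // callout and one more DML statement, and has used less than 80%
    // of its CPU time allowance.
    public static Boolean hasHeadroom() {
        return Limits.getCallouts() < Limits.getLimitCallouts()
            && Limits.getDmlStatements() < Limits.getLimitDmlStatements()
            && Limits.getCpuTime() < Limits.getLimitCpuTime() * 0.8;
    }
}
```

A check like this can tell a job when to stop and hand the remaining work to the next chained job, but on its own it does not complete the business process.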

✅ Final Verdict:

A. Use Queueable Apex to chain the jobs to run sequentially is the best approach. It supports long-running, callout-heavy, and sequential processes while adhering to Salesforce governor limits.

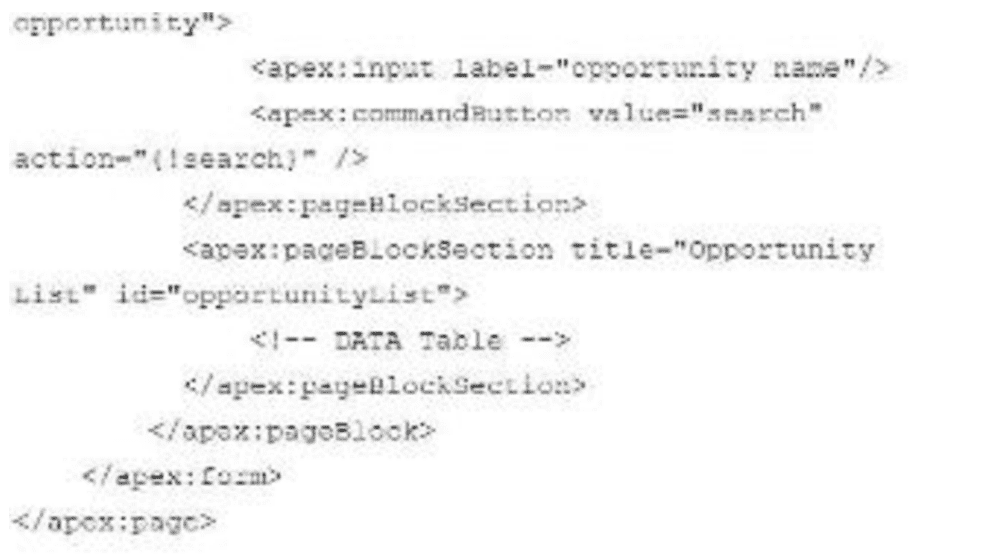

Refer to the exhibit:

Users of this Visualforce page complain that the page does a full refresh every time the Search button is pressed.

What should the developer do to ensure that a partial refresh is made so that only the section identified with opportunityList is re-drawn on the screen?

A. Enclose the DATA table within the

B. Implement the render attribute on the apex:commandButton tag

C. Ensure the action method search returns null.

D. Implement the apex:commandButton tag with immediate=true

Explanation:

To address the issue of the Visualforce page performing a full refresh every time the Search button is pressed, the goal is to enable a partial refresh that updates only the section identified as opportunityList.

Understanding the Problem

The Visualforce page includes an

Evaluating the Options

A. Enclose the DATA table within the

➜ What it does: The

➜ Impact: Wrapping the

➜ Verdict: This alone doesn’t solve the partial refresh issue.

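B. Implement the render attribute on the apex:commandButton tag

➜ What it does: The rerender attribute (written as "render" in the option, likely a typo) on apex:commandButton lists the id of each component to refresh when the action completes, so the request is sent as an AJAX partial-page update instead of a full page reload.

➜ Impact: When the Search button is clicked, the search action method runs, and only the component with id="opportunityList" is redrawn.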

➜ Verdict: This directly addresses the requirement for a partial refresh.

C. Ensure the action method search returns null

➜ What it does: In Visualforce, an action method that returns null keeps the user on the same page after the action completes, rather than navigating to a new page. For example:

public PageReference search() {

// Logic to populate opportunityList

return null;

}

➜ Impact: Returning null prevents navigation but doesn’t inherently trigger a partial refresh. Without a rerender attribute on the apex:commandButton, the page still performs a full refresh.

➜ Verdict: This doesn’t ensure a partial refresh by itself.

D. Implement the apex:commandButton tag with immediate=true

➜ What it does: The immediate="true" attribute on apex:commandButton skips the validation and field update phases and runs the action immediately. For example:

<apex:commandButton value="Search" action="{!search}" immediate="true"/>

➜ Impact: This bypasses input validation and updates, which might be useful in some cases, but it doesn’t control the rendering process. Without a rerender attribute, the button still causes a full page refresh. The immediate attribute is unrelated to partial page updates.

➜ Verdict: This doesn’t achieve the desired partial refresh.

Conclusion:

The key to a partial refresh in Visualforce is using the rerender attribute on the apex:commandButton to specify the id of the section to update (in this case, opportunityList). This leverages AJAX to refresh only that section.

Thus, the developer should:

B. Implement the render attribute on the apex:commandButton tag
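Since the exhibit is not reproduced here, the following controller is only a sketch of how the server side of such a page might look. It assumes a hypothetical class named `OpportunitySearchController` with a `searchTerm` input, and a button on the page declared along the lines of `<apex:commandButton value="Search" action="{!search}" reRender="opportunityList"/>`.

```apex
public with sharing class OpportunitySearchController {
    public String searchTerm { get; set; }
    public List<Opportunity> opportunities { get; private set; }

    public OpportunitySearchController() {
        opportunities = new List<Opportunity>();
    }

    // Action method bound to the Search button.
    public PageReference search() {
        if (String.isBlank(searchTerm)) {
            opportunities = new List<Opportunity>();
        } else {
            // Trailing wildcard only, so an index on Name can still be used.
            String namePrefix = searchTerm.trim() + '%';
            opportunities = [
                SELECT Id, Name, StageName
                FROM Opportunity
                WHERE Name LIKE :namePrefix
                ORDER BY Name
                LIMIT 100
            ];
        }
        // Returning null stays on the same page; the reRender attribute
        // on the button decides which component is redrawn.
        return null;
    }
}
```

The controller is the same whether the page refreshes fully or partially; only the markup attribute changes what is redrawn.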

Which use case can be performed only by using asynchronous Apex?

A. Querying tens of thousands of records

B. Making a call to schedule a batch process to complete in the future

C. Calling a web service from an Apex trigger

D. Updating a record after the completion of an insert

Explanation:

To determine which use case can only be performed using asynchronous Apex in Salesforce, let's evaluate each option based on the nature of synchronous and asynchronous processing in Apex.

Asynchronous Apex refers to operations that are executed in the background, separate from the main transaction, allowing time-consuming tasks to run without delaying the user experience or hitting governor limits. Examples include Batch Apex, Queueable Apex, Scheduled Apex, and Future methods. Synchronous Apex, on the other hand, executes immediately within the current transaction, such as in triggers or standard controller actions.

Option A: Querying tens of thousands of records

In Salesforce, SOQL queries in a synchronous context can retrieve up to 50,000 records within a single transaction, subject to governor limits. For example, you can use a SOQL query in a trigger or Visualforce controller to fetch tens of thousands of records synchronously, as long as the limit isn't exceeded. However, if the dataset exceeds 50,000 records or requires processing that might hit other limits (e.g., CPU time), asynchronous methods like Batch Apex, which uses a QueryLocator, become necessary. Since querying tens of thousands of records (assuming within the 50,000 limit) can be done synchronously, this use case does not require asynchronous Apex.

Option B: Making a call to schedule a batch process to complete in the future

Scheduling a batch process to run at a future time involves calling System.scheduleBatch, or System.schedule with a class that implements the Schedulable interface. The batch job itself runs asynchronously, but the call that schedules it is an ordinary method call that can be made from synchronous code such as a trigger, a Visualforce controller, or anonymous Apex. The call returns immediately with a job ID and the transaction continues. Because the scheduling call does not have to originate in asynchronous Apex, this use case does not require it.

Option C: Calling a web service from an Apex trigger

Apex triggers run synchronously inside the transaction of the database operation that fired them, and Salesforce does not allow a callout to be made directly from that context: an HTTP or SOAP callout issued from trigger code fails with a System.CalloutException ("Callout from triggers are currently not supported"). To call a web service in response to a trigger, the trigger has to hand the work to asynchronous Apex, for example a future method annotated with @future(callout=true) or a Queueable class that implements Database.AllowsCallouts. The callout then runs in its own transaction after the triggering transaction commits. This use case can therefore be performed only by using asynchronous Apex.

Option D: Updating a record after the completion of an insert

This is a common scenario handled by an after insert trigger. When a record is inserted, an after insert trigger can execute synchronously to update the same record or related records within the same transaction. For example:

trigger MyTrigger on Account (after insert) {

    // Trigger.new is read-only in an after trigger, so update fresh copies keyed by Id

    List<Account> accountsToUpdate = new List<Account>();

    for (Account a : Trigger.new) {

        accountsToUpdate.add(new Account(Id = a.Id, Description = 'Updated after insert'));

    }

    update accountsToUpdate;

}

This operation is immediate and synchronous, completing before the transaction ends. Asynchronous Apex isn’t needed, as the update can occur in real-time within the trigger.

Conclusion:

A: Can be done synchronously up to 50,000 records, so asynchronous Apex isn’t required.

B: The scheduled batch runs asynchronously, but the call that schedules it can be made from synchronous code.

C: Callouts are not allowed directly from a trigger; they must be handed off to a future method or a Queueable job.

D: Updating after an insert is a synchronous trigger operation.

The only use case that can be performed only by using asynchronous Apex is C. Calling a web service from an Apex trigger.
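The asynchronous hand-off for trigger callouts discussed under Option C can be sketched as follows. The class name `CaseCalloutService`, the method name, and the Named Credential `Case_Notifier` are hypothetical; only `@future(callout=true)` and the HTTP classes are platform features.

```apex
public class CaseCalloutService {
    // Runs in its own transaction after the triggering transaction commits.
    // Future methods accept only primitives and collections of primitives,
    // so the trigger passes record Ids rather than sObjects.
    @future(callout=true)
    public static void notifyExternalSystem(Set<Id> caseIds) {
        HttpRequest req = new HttpRequest();
        req.setEndpoint('callout:Case_Notifier/cases'); // hypothetical Named Credential
        req.setMethod('POST');
        req.setHeader('Content-Type', 'application/json');
        req.setBody(JSON.serialize(caseIds));

        HttpResponse res = new Http().send(req);
        if (res.getStatusCode() >= 300) {
            System.debug(LoggingLevel.ERROR,
                'Case notification failed: ' + res.getStatus());
        }
    }
}
```

A trigger would call it with something like `CaseCalloutService.notifyExternalSystem(Trigger.newMap.keySet());`, which queues the callout and returns to the trigger immediately.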
