Salesforce-MuleSoft-Platform-Architect Practice Test

Updated On 1-Jan-2026

152 Questions

A Mule 4 API has been deployed to CloudHub and a Basic Authentication - Simple policy has been applied to all API methods and resources. However, the API is still accessible by clients without using authentication. How is this possible?

A. The API Router component is pointing to the incorrect Exchange version of the API

B. The Autodiscovery element is not present, in the deployed Mule application

C. No… for client applications have been created of this API

D. One of the application’s CloudHub workers restarted

Explanation:

The Basic Authentication - Simple policy is applied in API Manager (managed API instance). For policies to be enforced at runtime on a Mule application deployed to CloudHub, the application must be linked to the managed API instance via API Autodiscovery.

The Autodiscovery global element (in Mule 4, <api-gateway:autodiscovery>) in the Mule XML configuration tells the runtime to connect to API Manager, identify the associated API instance (by apiId), and dynamically apply all configured policies (including Basic Authentication - Simple).

If Autodiscovery is missing or misconfigured in the deployed Mule application, the runtime treats the application as standalone/ungoverned. No policies from API Manager are applied, even though they appear configured in the console.

As a result, the API endpoints are accessible without any authentication, exactly as described.
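To make this failure mode concrete, here is a toy Python model. It is not MuleSoft code: `ApiManager`, `MuleApp`, `basic_auth_required`, and the apiId value `"12345"` are all hypothetical. Policies configured for an API instance are applied only when the deployed application carries an Autodiscovery apiId linking it to that instance.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# A policy inspects a request and returns an HTTP status to reject it, or None to let it pass.
Policy = Callable[[dict], Optional[int]]


def basic_auth_required(request: dict) -> Optional[int]:
    # Toy stand-in for Basic Authentication - Simple: reject requests without an Authorization header.
    return None if "Authorization" in request.get("headers", {}) else 401


@dataclass
class ApiManager:
    # Hypothetical registry: API instance ID -> policies configured in the console.
    policies: Dict[str, List[Policy]] = field(default_factory=dict)


@dataclass
class MuleApp:
    # None models a deployed application with no Autodiscovery element.
    autodiscovery_api_id: Optional[str] = None

    def effective_policies(self, manager: ApiManager) -> List[Policy]:
        if self.autodiscovery_api_id is None:
            return []  # not linked to any API instance, so nothing is enforced
        return manager.policies.get(self.autodiscovery_api_id, [])

    def handle(self, request: dict, manager: ApiManager) -> int:
        for policy in self.effective_policies(manager):
            status = policy(request)
            if status is not None:
                return status
        return 200  # the request reaches the API implementation


manager = ApiManager(policies={"12345": [basic_auth_required]})
anonymous = {"headers": {}}
print(MuleApp(autodiscovery_api_id=None).handle(anonymous, manager))     # 200
print(MuleApp(autodiscovery_api_id="12345").handle(anonymous, manager))  # 401
```

The policy exists in the registry in both cases; only the link from the application decides whether it runs.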

Why not the other options?

A. The API Router component is pointing to the incorrect Exchange version: API Router (for implementing APIs) is unrelated to policy enforcement. A wrong asset version might cause design-time issues, but it does not bypass runtime policies.

C. No client applications have been created for this API: Basic Authentication - Simple does not require client applications or contracts (unlike Client ID Enforcement or OAuth policies). It requires a valid username and password on every request, regardless of client registration.

D. One of the application’s CloudHub workers restarted: Worker restarts do not disable policy enforcement. Autodiscovery reconnects on startup, and policies remain applied across all workers.
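As context for option C: when the policy is enforced, each request must carry credentials in the standard HTTP Basic scheme (RFC 7617), where the `Authorization` header holds `Basic` followed by the Base64 encoding of `username:password`. The Python sketch below builds that header and checks it against a single configured credential pair. The usernames, passwords, and function names are made up, and the check is an illustration, not the policy's implementation.

```python
import base64
import hmac


def basic_auth_header(username: str, password: str) -> str:
    # RFC 7617: "Basic " + base64("username:password")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def is_authorized(header_value: str, expected_user: str, expected_password: str) -> bool:
    # Compare against the one credential pair configured on the policy (constant-time compare).
    expected = basic_auth_header(expected_user, expected_password)
    return hmac.compare_digest(header_value.encode("utf-8"), expected.encode("utf-8"))


header = basic_auth_header("alice", "s3cret")
print(header)                                                           # Basic YWxpY2U6czNjcmV0
print(is_authorized(header, "alice", "s3cret"))                         # True
print(is_authorized(basic_auth_header("alice", "wrong"), "alice", "s3cret"))  # False
```

No client application or contract appears anywhere in this check, which is why option C does not explain the behavior.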

References:

MuleSoft Documentation: API Autodiscovery – Required for policy application in managed APIs.

Common troubleshooting: "Policies not applied" → check Autodiscovery configuration and apiId.

Architect and developer certification materials highlight missing Autodiscovery as the primary reason policies fail to enforce on CloudHub deployments.

A team is planning to enhance an Experience API specification, and they are following API-led connectivity design principles.

What is their motivation for enhancing the API?

A. The primary API consumer wants certain kinds of endpoints changed from the Center for Enablement standard to the consumer system standard

B. The underlying System API is updated to provide more detailed data for several heavily used resources

C. An IP Allowlist policy is being added to the API instances in the Development and Staging environments

D. A Canonical Data Model is being adopted that impacts several types of data included in the API

Explanation:

In MuleSoft’s API-led connectivity approach:

Experience APIs are designed to present data tailored to specific consumers (mobile apps, web apps, partner systems).

Enhancements to an Experience API specification are typically driven by changes in how data should be represented or consumed.

A Canonical Data Model (CDM) defines a standardized representation of data across the organization. When a CDM is adopted, Experience APIs must be updated to align with this model, ensuring consistency and interoperability across consumers.

This is a textbook motivation for enhancing an Experience API specification: aligning the API’s payloads and resources with the new canonical model.
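A minimal Python sketch of what aligning payloads with a CDM can mean in practice: a system-specific record is mapped into a canonical type, and the Experience API response is expressed in canonical field names. All type and field names (`CanonicalCustomer`, `AccountNumber`, `customerId`, and so on) are hypothetical, and in a real application network the mapping from CRM fields would usually live in a lower layer; it is collapsed here for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanonicalCustomer:
    # Hypothetical canonical Customer agreed across the organization.
    customer_id: str
    full_name: str
    email: str


def from_crm_record(record: dict) -> CanonicalCustomer:
    # Hypothetical system-specific CRM field names mapped to the canonical ones.
    return CanonicalCustomer(
        customer_id=str(record["AccountNumber"]),
        full_name=f"{record['FirstName']} {record['LastName']}",
        email=record["EmailAddr"].strip().lower(),
    )


def to_experience_payload(customer: CanonicalCustomer) -> dict:
    # After CDM adoption, the Experience API response uses canonical names, not CRM names.
    return {
        "customerId": customer.customer_id,
        "fullName": customer.full_name,
        "email": customer.email,
    }


crm = {"AccountNumber": 1042, "FirstName": "Ada", "LastName": "Lovelace", "EmailAddr": " Ada@Example.com "}
print(to_experience_payload(from_crm_record(crm)))
# {'customerId': '1042', 'fullName': 'Ada Lovelace', 'email': 'ada@example.com'}
```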

❌ Why not the other options?

A. Changing endpoints to consumer system standard ❌

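Incorrect. Experience APIs tailor data and interactions to their consumers, but their endpoint conventions should still follow organizational standards (like C4E guidelines) rather than one consumer system's conventions. Abandoning the standard for a single consumer would break reuse and governance principles.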

B. System API updated with more detailed data ❌

Incorrect. This impacts Process APIs (which orchestrate System APIs), not Experience APIs. Experience APIs are consumer-facing and don’t directly expose raw System API changes.

C. Adding IP Allowlist policy ❌

Incorrect. Policies are applied at the API instance level in API Manager, not by changing the API specification. This is a runtime governance change, not a spec enhancement.

🔗 References:

MuleSoft Docs: API-led Connectivity

MuleSoft Best Practices: Canonical Data Model adoption in API-led design

A Rate Limiting policy is applied to an API implementation to protect the back-end system. Recently, there have been surges in demand that cause some API client POST requests to the API implementation to be rejected with policy-related errors, causing delays and complications to the API clients.

How should the API policies that are applied to the API implementation be changed to reduce the frequency of errors returned to API clients, while still protecting the back-end system?

A. Keep the Rate Limiting policy and add a Client ID Enforcement policy

B. Remove the Rate Limiting policy and add an HTTP Caching policy

C. Remove the Rate Limiting policy and add a Spike Control policy

D. Keep the Rate Limiting policy and add an SLA-based Spike Control policy

Explanation:

Rate Limiting is already protecting the backend, but it’s hard-rejecting bursts, which is why clients see policy errors during demand surges. The best change is to keep the long-term protection (Rate Limiting) and add a policy that smooths short-term spikes (Spike Control), ideally SLA-based so different clients can have different spike tolerances.

Why D works best

1) Rate Limiting protects the backend over time

Rate Limiting enforces a quota per time window (per client, if SLA-based). Once the quota is reached, further requests are rejected (often HTTP 429). This protects the backend from sustained overuse.
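A minimal Python sketch of quota-per-window behavior, assuming a simple fixed window and a caller-supplied clock in milliseconds. The class and parameter names are hypothetical, and the real policy's internals (for example, how windows are tracked across workers) are not modeled.

```python
from typing import Optional


class FixedWindowRateLimiter:
    """Admit at most max_requests per fixed window of window_ms; reject the rest with 429."""

    def __init__(self, max_requests: int, window_ms: int) -> None:
        self.max_requests = max_requests
        self.window_ms = window_ms
        self.current_window: Optional[int] = None
        self.count = 0

    def handle(self, now_ms: int) -> int:
        window = now_ms // self.window_ms  # index of the window containing now_ms
        if window != self.current_window:
            # A new window has started, so the quota resets.
            self.current_window = window
            self.count = 0
        if self.count < self.max_requests:
            self.count += 1
            return 200
        return 429  # quota exhausted: reject immediately


limiter = FixedWindowRateLimiter(max_requests=3, window_ms=1000)
print([limiter.handle(t) for t in (0, 10, 20, 30, 1000)])
# [200, 200, 200, 429, 200]
```

The fourth request is rejected at once even though capacity frees up a moment later; that immediate rejection is what clients experience during a surge.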

2) Spike Control reduces errors during short surges

Spike Control is designed for traffic bursts. Instead of immediately rejecting requests when a threshold is exceeded, Spike Control can reattempt/delay requests briefly (burst smoothing). That reduces the number of immediate policy rejections clients see during short-lived surges, while still limiting backend load.
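The delay-and-reattempt idea can be sketched in Python as below. This is a toy model under stated assumptions, not the policy's implementation: `SpikeControlSketch`, `delay_ms`, and `reattempts` are hypothetical names, requests are processed in arrival order, and a delayed request consumes capacity in the window where it is finally admitted.

```python
from typing import Dict, Tuple


class SpikeControlSketch:
    """Admit at most max_requests per window; delay and reattempt over-limit requests."""

    def __init__(self, max_requests: int, window_ms: int, delay_ms: int, reattempts: int) -> None:
        self.max_requests = max_requests
        self.window_ms = window_ms
        self.delay_ms = delay_ms
        self.reattempts = reattempts
        self.admitted: Dict[int, int] = {}  # window index -> requests admitted in that window

    def _try_admit(self, t_ms: int) -> bool:
        window = t_ms // self.window_ms
        if self.admitted.get(window, 0) < self.max_requests:
            self.admitted[window] = self.admitted.get(window, 0) + 1
            return True
        return False

    def handle(self, arrival_ms: int) -> Tuple[int, int]:
        """Return (status, time the request was admitted or finally rejected)."""
        t = arrival_ms
        for attempt in range(self.reattempts + 1):
            if attempt > 0:
                t += self.delay_ms  # hold the request briefly before reattempting
            if self._try_admit(t):
                return 200, t
        return 429, t  # still over the limit after all reattempts


spike = SpikeControlSketch(max_requests=2, window_ms=1000, delay_ms=1000, reattempts=1)
print([spike.handle(0) for _ in range(5)])
# [(200, 0), (200, 0), (200, 1000), (200, 1000), (429, 1000)]
```

With the same limit of 2 requests per 1000 ms, a fixed-window limiter that rejects immediately would turn away three of these five simultaneous requests; here only one is rejected, and the back end still never sees more than 2 requests per window.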

3) SLA-based Spike Control aligns with real client behavior

Making Spike Control SLA-based allows:

- premium clients to tolerate higher burst rates

- standard clients to have stricter burst limits

This reduces unnecessary errors for high-value clients while maintaining overall system protection.

Why the other options are not as good

A (add Client ID Enforcement) ❌

Client ID enforcement controls who can call the API, not how much traffic gets through. It doesn’t solve burst-related rejections.

B (replace with HTTP Caching) ❌

Caching generally benefits GET requests. The problem here is that POST requests are being rejected; caching won't help them and doesn't protect write operations the same way.

C (replace Rate Limiting with Spike Control) ❌

Spike Control handles short bursts but is not a substitute for rate limiting quotas over longer periods. Removing Rate Limiting weakens sustained-load protection.

✅ Final answer: D

A business process is being implemented within an organization's application network. The architecture group proposes using a more coarse-grained application network design with relatively fewer APIs deployed to the application network compared to a more fine-grained design.

Overall, which factor typically increases with a more coarse-grained design for this business process implementation and deployment compared with using a more fine-grained design?

A. The complexity of each API implementation

B. The number of discoverable assets related to APIs deployed in the application network

C. The number of possible connections between API implementations in the application network

D. The usage of network infrastructure resources by the application network

Explanation:

Coarse-Grained Design: In this model, each API handles a broader range of business functions or data entities. Because a single API is doing "more"—such as managing multiple related actions (e.g., registration, login, and profile updates all in one "User API")—the internal code, logic flows, and error handling within that specific API become significantly more complex.

Encapsulated Logic: A coarse-grained API often acts like a "mini-monolith." It must contain the logic to route different types of requests, handle various backend system interactions, and manage complex data transformations internally, rather than delegating those tasks to separate, simpler APIs.

Reduced Network Overhead: While individual API complexity increases, the overall network overhead typically decreases because there are fewer total components to manage and fewer "hops" across the network to complete a business process.

🔴 Incorrect Answers

B. The number of discoverable assets: This decreases with a coarse-grained design. Since you are bundling functionality into fewer APIs, there will be fewer individual assets (API specifications, fragments, etc.) published in Anypoint Exchange.

C. The number of possible connections: This also decreases. A fine-grained (microservices) approach creates a "web" of many small APIs talking to each other, leading to a high number of connections. A coarse-grained design results in fewer, more isolated nodes with fewer inter-dependencies.

D. The usage of network infrastructure resources: This generally decreases. Fine-grained architectures tend to consume more memory and CPU in aggregate because each small API carries its own runtime overhead (e.g., a dedicated CloudHub worker or replica). Coarse-grained APIs consolidate these functions, leading to more efficient use of infrastructure at the cost of less granular scaling.
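To put a number on option C: if every API could in principle call any other, the count of possible pairwise connections grows quadratically as n(n-1)/2. A tiny Python check with hypothetical API counts (12 for a fine-grained design, 4 for a coarse-grained one):

```python
def possible_connections(num_apis: int) -> int:
    # Each unordered pair of APIs is one potential connection: n * (n - 1) / 2.
    return num_apis * (num_apis - 1) // 2


print(possible_connections(12))  # 66 (hypothetical fine-grained design)
print(possible_connections(4))   # 6  (hypothetical coarse-grained design)
```

Cutting the API count to a third cuts the possible connections by roughly a factor of eleven here, which is why this factor falls rather than rises with coarser granularity.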

📚 Reference

MuleSoft Blog: Fine-grained vs. Coarse-grained APIs

Key Concept:

The Complexity Trade-off. If you want to limit management overhead and infrastructure costs, go coarse-grained. If you want maximum reusability, agility, and granular security, go fine-grained. The "sweet spot" usually involves finding the right balance where an API is small enough to be understood but large enough to provide a meaningful business service.

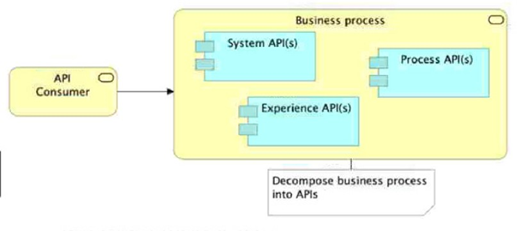

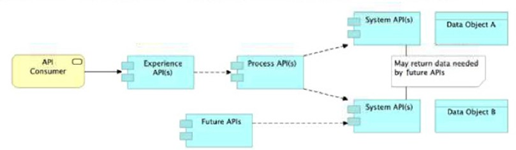

Refer to the exhibits.

Which architectural constraint is compatible with the API-led connectivity architectural style?

A. Always use a tiered approach by creating exactly one API for each of the three layers (Experience, Process, and System)

B. Use a Process API to orchestrate calls to multiple System APIs but not to other Process APIs

C. Allow System APIs to return data that is not currently required by the identified Process or Experience APIs

D. Handle customizations for the end-user application at the Process layer rather than at the Experience layer

Explanation:

Why B is compatible with API-led connectivity

API-led connectivity encourages clear separation of concerns across layers:

System APIs: encapsulate access to systems of record (DBs, SaaS, legacy)

Process APIs: implement business processes by orchestrating and composing data/capabilities from System APIs

Experience APIs: tailor the interface for specific consumers (web, mobile, partners)

A key architectural constraint that keeps the network clean and reusable is: Process APIs orchestrate System APIs, and you avoid building long chains of Process→Process calls unless there’s a very strong reason. That reduces coupling, prevents “process spaghetti,” and makes each Process API map cleanly to a business capability.
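The constraint can be expressed as a simple check over an application network's call graph. In this Python sketch the API names are hypothetical, and the `ALLOWED` table is an assumed strict reading of the layering (Experience calls Process, Process calls System, System calls nothing in the network); adjust it if your network permits other edges.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical application network: API name -> layer.
LAYERS: Dict[str, str] = {
    "mobile-exp": "experience",
    "order-process": "process",
    "pricing-process": "process",
    "sap-system": "system",
    "salesforce-system": "system",
}

# Assumed strict layering: caller layer -> layers it may call.
ALLOWED: Dict[str, Set[str]] = {
    "experience": {"process"},
    "process": {"system"},
    "system": set(),
}


def violations(calls: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return the (caller, callee) pairs that break the layering rule."""
    return [
        (caller, callee)
        for caller, callee in calls
        if LAYERS[callee] not in ALLOWED[LAYERS[caller]]
    ]


calls = [
    ("mobile-exp", "order-process"),
    ("order-process", "sap-system"),
    ("order-process", "salesforce-system"),
    ("order-process", "pricing-process"),  # Process -> Process chain
]
print(violations(calls))  # [('order-process', 'pricing-process')]
```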

Why the other options are not compatible

A ❌ “Exactly one API per layer” is not API-led. Real networks have many Experience, Process, and System APIs depending on consumers and systems.

C ❌ System APIs should provide stable, reusable system access, but they should not return “extra data just in case.” That leads to overexposure, larger payloads, and weaker contracts.

D ❌ Consumer-specific customization belongs in the Experience layer, not the Process layer. Process APIs should remain consumer-agnostic to maximize reuse.
